-

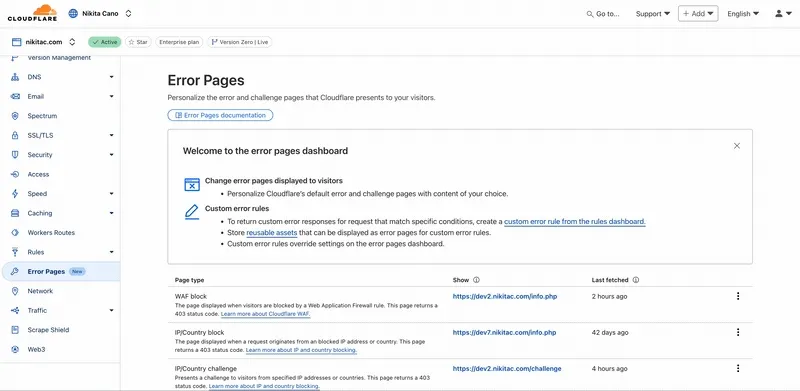

Custom Errors are now generally available for all paid plans — bringing a unified and powerful experience for customizing error responses at both the zone and account levels.

You can now manage Custom Error Rules, Custom Error Assets, and redesigned Error Pages directly from the Cloudflare dashboard. These features let you deliver tailored messaging when errors occur, helping you maintain brand consistency and improve user experience — whether it’s a 404 from your origin or a security challenge from Cloudflare.

What's new:

- Custom Errors are now GA – Available on all paid plans and ready for production traffic.

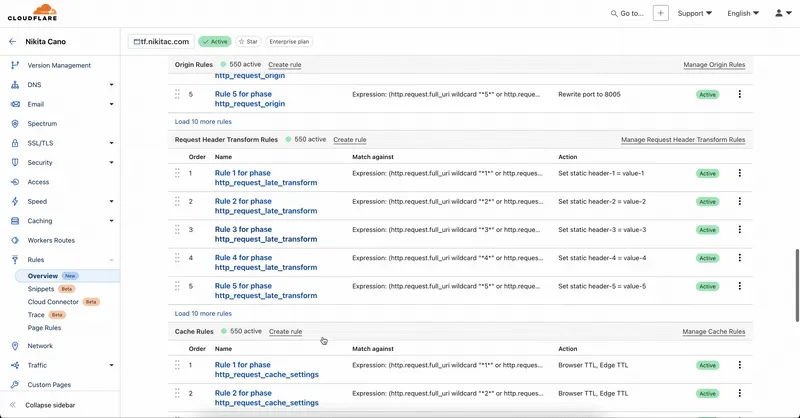

- UI for Custom Error Rules and Assets – Manage your zone-level rules from the Rules > Overview tab and your zone-level assets from the Rules > Settings tab.

- Define inline content or upload assets – Create custom responses directly in the rule builder, upload new assets, or reuse previously stored ones.

- Refreshed UI and new name for Error Pages – Formerly known as “Custom Pages,” Error Pages now offer a cleaner, more intuitive experience for both zone and account-level configurations.

- Powered by Ruleset Engine – Custom Error Rules support conditional logic and override Error Pages for 500 and 1000 class errors, as well as errors originating from your origin or other Cloudflare products. You can also configure Response Header Transform Rules to add, change, or remove HTTP headers from responses returned by Custom Error Rules.

Learn more in the Custom Errors documentation.

-

You can now create Python Workers which are executed via a cron trigger.

This is similar to how it's done in JavaScript Workers. Define a scheduled event listener in your Worker:

```python
from workers import handler

@handler
async def on_scheduled(event, env, ctx):
    print("cron processed")
```

Define a cron trigger configuration in your Wrangler configuration file:

```jsonc
{
  "triggers": {
    "crons": [
      "*/3 * * * *",
      "0 15 1 * *",
      "59 23 LW * *"
    ]
  }
}
```

```toml
[triggers]
# Schedule cron triggers:
# - At every 3rd minute
# - At 15:00 (UTC) on first day of the month
# - At 23:59 (UTC) on the last weekday of the month
crons = [ "*/3 * * * *", "0 15 1 * *", "59 23 LW * *" ]
```

Then test your new handler by using Wrangler with the `--test-scheduled` flag and making a request to `/cdn-cgi/handler/scheduled?cron=*+*+*+*+*`:

```sh
npx wrangler dev --test-scheduled
curl "http://localhost:8787/cdn-cgi/handler/scheduled?cron=*+*+*+*+*"
```

Consult the Workers Cron Triggers page for full details on cron triggers in Workers.
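For comparison, here is a rough TypeScript sketch of a scheduled handler that dispatches on the cron expression that fired. The job names and the `jobForCron` helper are hypothetical, and the `ScheduledControllerLike` type is a local stand-in so the sketch is self-contained; a real project would typically take its types from `@cloudflare/workers-types`.

```typescript
// Local stand-in for the controller passed to a scheduled handler (assumption:
// only the `cron` and `scheduledTime` fields are used here).
interface ScheduledControllerLike {
  cron: string; // the cron expression that triggered this run
  scheduledTime: number; // epoch milliseconds
}

// Hypothetical job names, keyed by the cron expressions from the configuration above.
function jobForCron(cron: string): string {
  switch (cron) {
    case "*/3 * * * *":
      return "every-three-minutes";
    case "0 15 1 * *":
      return "monthly-report";
    case "59 23 LW * *":
      return "last-weekday-cleanup";
    default:
      return "unknown";
  }
}

// In a Worker module this object would be the default export.
const worker = {
  async scheduled(
    controller: ScheduledControllerLike,
    _env: unknown,
    _ctx: unknown,
  ): Promise<void> {
    console.log(`cron processed: ${jobForCron(controller.cron)}`);
  },
};
```

Dispatching on `controller.cron` keeps one Worker responsible for several schedules without needing a separate deployment per trigger.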

-

You can now filter AutoRAG search results by `folder` and `timestamp` using metadata filtering to narrow down the scope of your query.

This makes it easy to build multitenant experiences where each user can only access their own data. By organizing your content into per-tenant folders and applying a `folder` filter at query time, you ensure that each tenant retrieves only their own documents.

Example folder structure:

```
customer-a/logs/
customer-a/contracts/
customer-b/contracts/
```

Example query:

```js
const response = await env.AI.autorag("my-autorag").search({
  query: "When did I sign my agreement contract?",
  filters: {
    type: "eq",
    key: "folder",
    value: "customer-a/contracts/",
  },
});
```

You can use metadata filtering by creating a new AutoRAG or reindexing existing data. To reindex all content in an existing AutoRAG, update any chunking setting and select Sync index. Metadata filtering is available for all data indexed on or after April 21, 2025.
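In a multitenant setup, the `folder` value should come from the authenticated tenant rather than from user input. The sketch below shows one way to build the filter object; the `tenantFolderFilter` helper and its validation rule are hypothetical, and only the `type`, `key`, and `value` fields are taken from the example query.

```typescript
// Shape of the filter object used in the example query.
interface FolderFilter {
  type: "eq";
  key: "folder";
  value: string;
}

// Hypothetical helper: builds the folder filter for one tenant and rejects
// segments that could point outside that tenant's prefix (for example "../").
function tenantFolderFilter(tenantId: string, subfolder: string): FolderFilter {
  const safeSegment = /^[A-Za-z0-9_-]+$/;
  for (const segment of [tenantId, subfolder]) {
    if (!safeSegment.test(segment)) {
      throw new Error(`invalid folder segment: ${segment}`);
    }
  }
  return { type: "eq", key: "folder", value: `${tenantId}/${subfolder}/` };
}

const filters = tenantFolderFilter("customer-a", "contracts");
console.log(filters.value); // customer-a/contracts/
```

The resulting object can be passed as `filters` in the search call, with `tenantId` taken from the session or token your application already trusts.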

If you are new to AutoRAG, get started with the Get started AutoRAG guide.

-

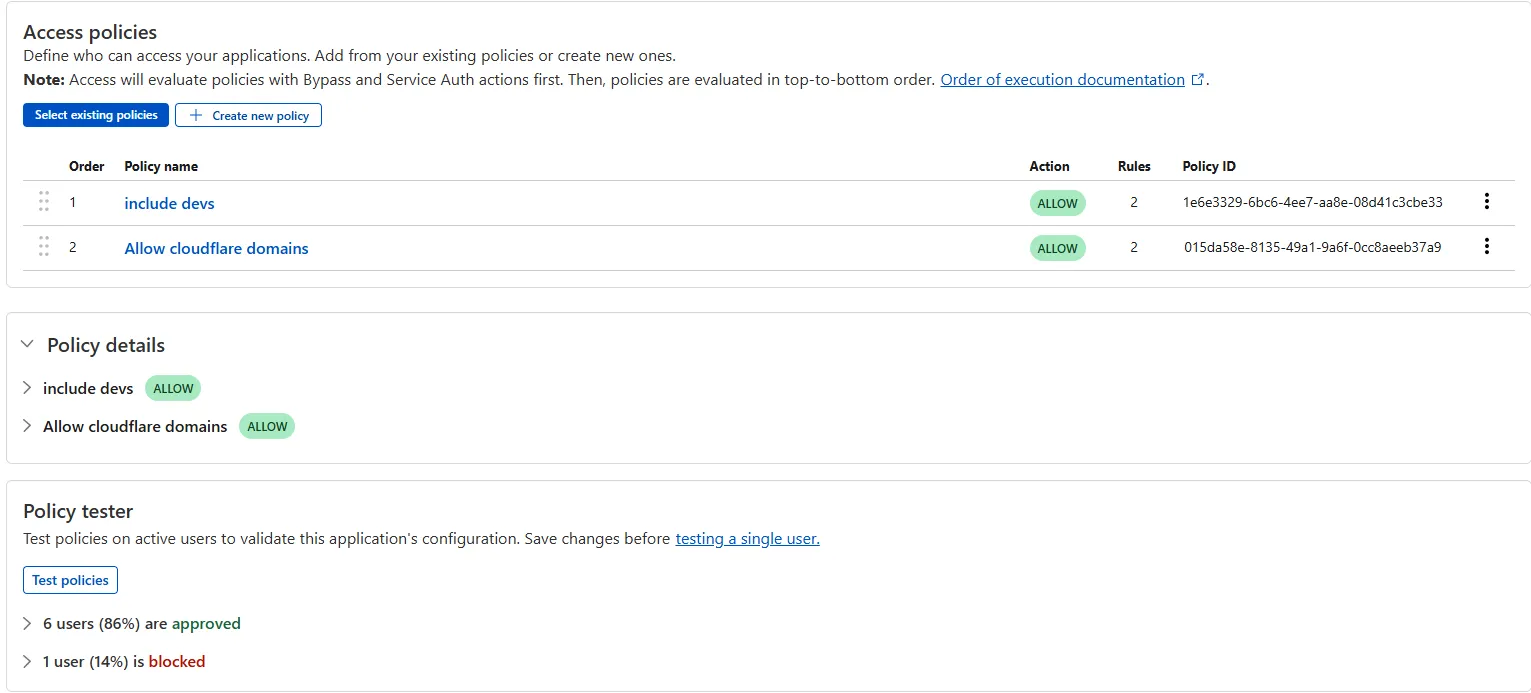

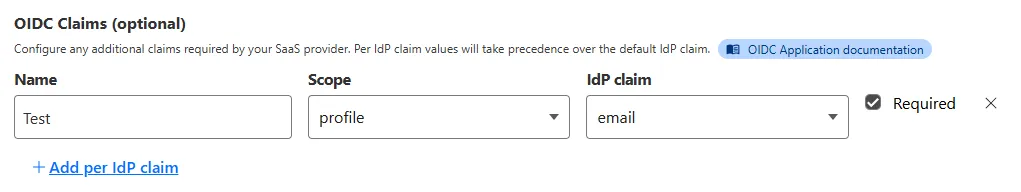

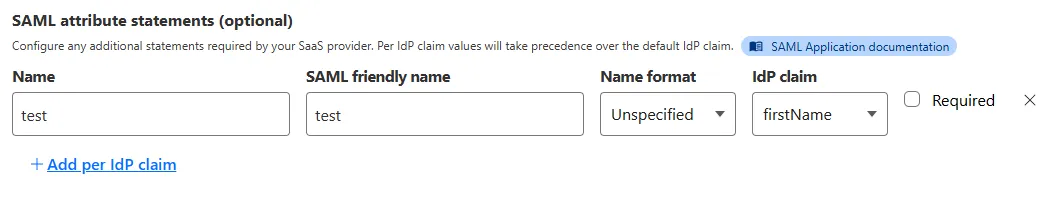

The Access bulk policy tester is now available in the Cloudflare Zero Trust dashboard. The bulk policy tester allows you to simulate Access policies against your entire user base before and after deploying any changes. The policy tester will simulate the configured policy against each user's last seen identity and device posture (if applicable).

-

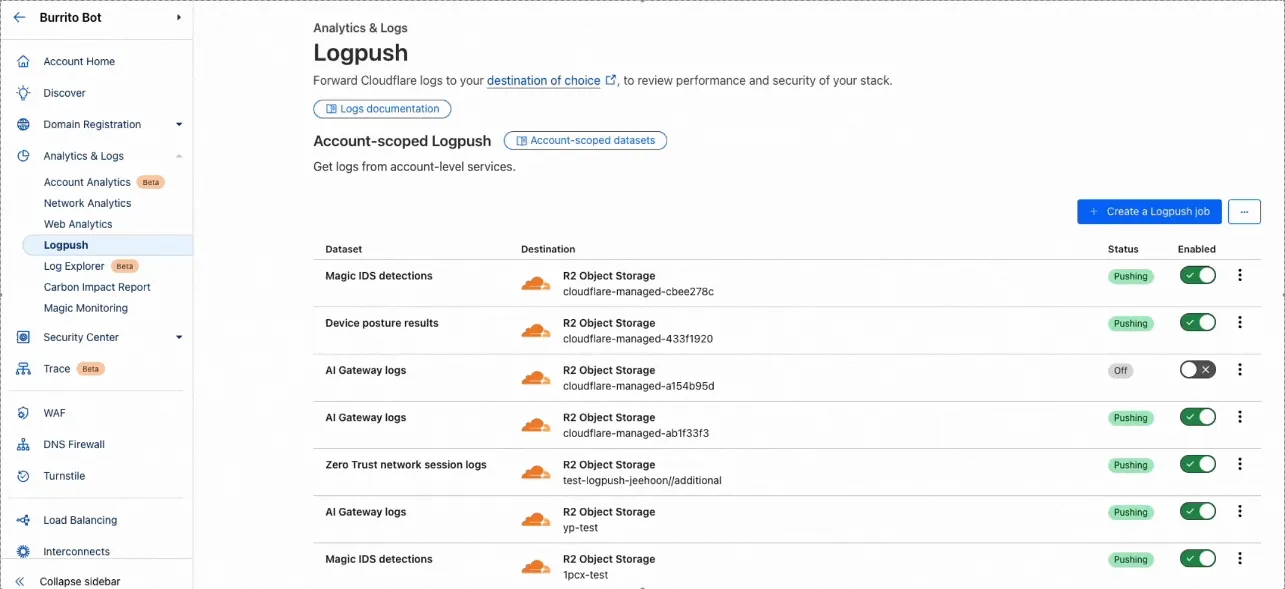

Custom Fields now support logging both raw and transformed values for request and response headers in the HTTP requests dataset.

These fields are configured per zone and apply to all Logpush jobs in that zone that include request headers or response headers. Each header can be logged in only one format, either raw or transformed, not both.

By default:

- Request headers are logged as raw values

- Response headers are logged as transformed values

These defaults can be overridden to suit your logging needs.

For more information, refer to the Custom fields documentation.

-

You can now retrieve up to 100 keys in a single bulk read request made to Workers KV using the binding.

This makes it easier to request multiple KV pairs within a single Worker invocation. Retrieving many key-value pairs using the bulk read operation is more performant than making individual requests since bulk read operations are not affected by Workers simultaneous connection limits.

```ts
// Read a single key
const key = "key-a";
const value = await env.NAMESPACE.get(key);

// Read multiple keys (up to 100 keys per request)
const keys = ["key-a", "key-b", "key-c" /* ... */];
const values: Map<string, string | null> = await env.NAMESPACE.get(keys);

// Print the value of "key-a" to the console.
console.log(`The first key is ${values.get("key-a")}.`);
```

Consult the Workers KV Read key-value pairs API for full details on Workers KV's new bulk reads support.
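If you need more than 100 keys, one option is to split the key list into batches of at most 100 and merge the results. This is a minimal sketch: `chunkKeys`, `getMany`, and the `BulkReadable` interface are hypothetical names, with `BulkReadable` standing in for the part of the KV binding used here.

```typescript
const MAX_BULK_KEYS = 100; // per-request limit stated in this announcement

// Split a list of keys into batches no larger than `size`.
function chunkKeys(keys: string[], size: number = MAX_BULK_KEYS): string[][] {
  if (size < 1) throw new Error("size must be at least 1");
  const batches: string[][] = [];
  for (let i = 0; i < keys.length; i += size) {
    batches.push(keys.slice(i, i + size));
  }
  return batches;
}

// Local stand-in for the bulk-read form of the KV binding's get().
interface BulkReadable {
  get(keys: string[]): Promise<Map<string, string | null>>;
}

// Read any number of keys, one bulk request per batch, and merge the results.
async function getMany(
  kv: BulkReadable,
  keys: string[],
): Promise<Map<string, string | null>> {
  const merged = new Map<string, string | null>();
  for (const batch of chunkKeys(keys)) {
    const part = await kv.get(batch);
    part.forEach((value, key) => merged.set(key, value));
  }
  return merged;
}
```

Batches are read sequentially here to keep the sketch simple; whether to issue them in parallel depends on your latency and subrequest budget.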

-

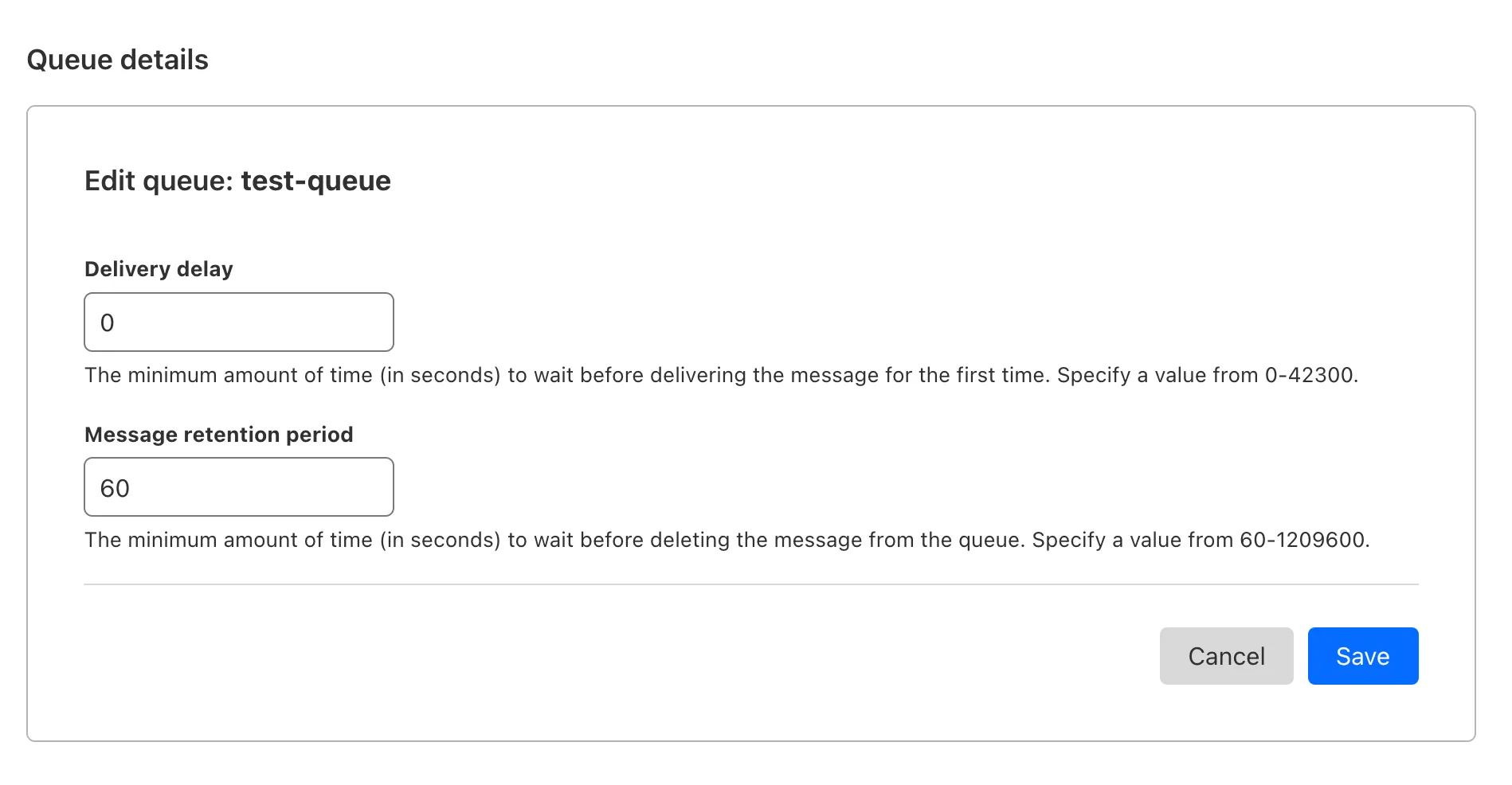

Queues pull consumers can now pull and acknowledge up to 5,000 messages / second per queue. Previously, pull consumers were rate limited to 1,200 requests / 5 minutes, aggregated across all queues.

Pull consumers allow you to consume messages over HTTP from any environment—including outside of Cloudflare Workers. They’re also useful when you need fine-grained control over how quickly messages are consumed.

To set up a new queue with a pull-based consumer using Wrangler, run:

```sh
npx wrangler queues create my-queue
npx wrangler queues consumer http add my-queue
```

You can also configure a pull consumer using the REST API or the Queues dashboard.

Once configured, you can pull messages from the queue using any HTTP client. You'll need a Cloudflare API Token with `queues_read` and `queues_write` permissions. For example:

```sh
curl "https://api.cloudflare.com/client/v4/accounts/${CF_ACCOUNT_ID}/queues/${QUEUE_ID}/messages/pull" \
  --header "Authorization: Bearer ${API_TOKEN}" \
  --header "Content-Type: application/json" \
  --data '{ "visibility_timeout": 10000, "batch_size": 2 }'
```

To learn more about how to acknowledge messages, pull batches at once, and set up multiple consumers, refer to the pull consumer documentation.
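The same pull request can be issued from any runtime with an HTTP client. The sketch below only assembles the URL, headers, and body shown in the curl example; `buildPullCall`, `PullParams`, and `HttpCall` are hypothetical names, and the body fields simply mirror the example rather than documenting the full API.

```typescript
interface PullParams {
  accountId: string;
  queueId: string;
  apiToken: string;
  batchSize: number;
  visibilityTimeout: number; // same value as "visibility_timeout" in the curl example
}

interface HttpCall {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Assemble the pull request without sending it, so it can be inspected or tested.
function buildPullCall(p: PullParams): HttpCall {
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${p.accountId}/queues/${p.queueId}/messages/pull`,
    method: "POST",
    headers: {
      Authorization: `Bearer ${p.apiToken}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      visibility_timeout: p.visibilityTimeout,
      batch_size: p.batchSize,
    }),
  };
}

// Usage with a fetch-capable runtime:
//   const call = buildPullCall({ accountId, queueId, apiToken, batchSize: 2, visibilityTimeout: 10000 });
//   const res = await fetch(call.url, { method: call.method, headers: call.headers, body: call.body });
```

Keeping request construction separate from sending makes it easier to control how often you pull, which is the main reason to choose a pull consumer.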

As always, Queues doesn't charge for data egress. Pull operations continue to be billed at the existing rate of $0.40 / million operations. The increased limits are available now on all new and existing queues. If you're new to Queues, get started with the Cloudflare Queues guide.

-

Workers AI for Developer Week - faster inference, new models, async batch API, expanded LoRA support

Happy Developer Week 2025! Workers AI is excited to announce a couple of new features and improvements available today. Check out our blog ↗ for all the announcement details.

We’re rolling out some in-place improvements to our models that can help speed up inference by 2-4x! Users of the models below will enjoy an automatic speed boost starting today:

- `@cf/meta/llama-3.3-70b-instruct-fp8-fast` gets a speed boost of 2-4x, leveraging techniques like speculative decoding, prefix caching, and an updated inference backend.

- `@cf/baai/bge-small-en-v1.5`, `@cf/baai/bge-base-en-v1.5`, `@cf/baai/bge-large-en-v1.5` get an updated back end, which should improve inference times by 2x.

  - With the `bge` models, we’re also announcing a new parameter called `pooling` which can take `cls` or `mean` as options. We highly recommend using `pooling: cls` which will help generate more accurate embeddings. However, embeddings generated with cls pooling are not backwards compatible with mean pooling. For this to not be a breaking change, the default remains as mean pooling. Please specify `pooling: cls` to enjoy more accurate embeddings going forward.
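To see why embeddings from the two pooling modes cannot be mixed in one index, it helps to look at what each mode computes. The sketch below is a generic illustration, not the models' actual implementation: it assumes the first token vector is the CLS token (the usual BERT-style convention), and `embeddingInput` is a hypothetical helper that builds a request body with the `pooling` parameter set.

```typescript
type TokenVectors = number[][]; // one row per token, one column per dimension

// Mean pooling: average every token vector, dimension by dimension.
function meanPool(tokens: TokenVectors): number[] {
  if (tokens.length === 0) throw new Error("no token vectors");
  const dims = tokens[0].length;
  const pooled: number[] = [];
  for (let d = 0; d < dims; d++) {
    let total = 0;
    for (const row of tokens) total += row[d];
    pooled.push(total / tokens.length);
  }
  return pooled;
}

// CLS pooling: take only the first token's vector (assumed to be the CLS token).
function clsPool(tokens: TokenVectors): number[] {
  if (tokens.length === 0) throw new Error("no token vectors");
  return tokens[0].slice();
}

// Hypothetical helper that builds an embeddings request body with explicit pooling.
function embeddingInput(text: string[], pooling: "cls" | "mean" = "mean") {
  return { text, pooling };
}

const toy: TokenVectors = [[1, 0], [3, 4]];
console.log(meanPool(toy)); // average of both rows: 2 and 2
console.log(clsPool(toy)); // first row only: 1 and 0
console.log(embeddingInput(["hello world"], "cls"));
```

Because the two modes produce different vectors for the same text, switching an existing index to `cls` generally means re-embedding the stored documents as well as the queries.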

We’re also excited to launch a few new models in our catalog to help round out your experience with Workers AI. We’ll be deprecating some older models in the future, so stay tuned for a deprecation announcement. Today’s new models include:

- `@cf/mistralai/mistral-small-3.1-24b-instruct`: a 24B parameter model achieving state-of-the-art capabilities comparable to larger models, with support for vision and tool calling.

- `@cf/google/gemma-3-12b-it`: well-suited for a variety of text generation and image understanding tasks, including question answering, summarization and reasoning, with a 128K context window, and multilingual support in over 140 languages.

- `@cf/qwen/qwq-32b`: a medium-sized reasoning model, which is capable of achieving competitive performance against state-of-the-art reasoning models, e.g., DeepSeek-R1, o1-mini.

- `@cf/qwen/qwen2.5-coder-32b-instruct`: the current state-of-the-art open-source code LLM, with its coding abilities matching those of GPT-4o.

Introducing a new batch inference feature that allows you to send us an array of requests, which we will fulfill as fast as possible and send back as an array. This is really helpful for large workloads such as summarization, embeddings, etc. where you don’t have a human-in-the-loop. Using the batch API will guarantee that your requests are fulfilled eventually, rather than erroring out if we don’t have enough capacity at a given time.

Check out the tutorial to get started! Models that support batch inference today include:

- `@cf/meta/llama-3.3-70b-instruct-fp8-fast`

- `@cf/baai/bge-small-en-v1.5`

- `@cf/baai/bge-base-en-v1.5`

- `@cf/baai/bge-large-en-v1.5`

- `@cf/baai/bge-m3`

- `@cf/meta/m2m100-1.2b`

We’ve upgraded our LoRA experience to include 8 newer models, and can support ranks of up to 32 with a 300MB safetensors file limit (previously limited to a rank of 8 and 100MB safetensors). Check out our LoRAs page to get started. Models that support LoRAs now include:

- `@cf/meta/llama-3.2-11b-vision-instruct`

- `@cf/meta/llama-3.3-70b-instruct-fp8-fast`

- `@cf/meta/llama-guard-3-8b`

- `@cf/meta/llama-3.1-8b-instruct-fast` (coming soon)

- `@cf/deepseek-ai/deepseek-r1-distill-qwen-32b` (coming soon)

- `@cf/qwen/qwen2.5-coder-32b-instruct`

- `@cf/qwen/qwq-32b`

- `@cf/mistralai/mistral-small-3.1-24b-instruct`

- `@cf/google/gemma-3-12b-it`

-

You can now use more flexible redirect capabilities in Cloudflare One with Gateway.

- A new Redirect action is available in the HTTP policy builder, allowing admins to redirect users to any URL when their request matches a policy. You can choose to preserve the original URL and query string, and optionally include policy context via query parameters.

- For Block actions, admins can now configure a custom URL to display when access is denied. This block page redirect is set at the account level and can be overridden in DNS or HTTP policies. Policy context can also be passed along in the URL.

Learn more in our documentation for HTTP Redirect and Block page redirect.

-

Cloudflare Stream has completed an infrastructure upgrade for our Live WebRTC beta support which brings increased scalability and improved playback performance to all customers. WebRTC allows broadcasting directly from a browser (or supported WHIP client) with ultra-low latency to tens of thousands of concurrent viewers across the globe.

Additionally, as part of this upgrade, the WebRTC beta now supports Signed URLs to protect playback, just like our standard live stream options (HLS/DASH).

For more information, learn about the Stream Live WebRTC beta.

-

Today, we're launching R2 Data Catalog in open beta, a managed Apache Iceberg catalog built directly into your Cloudflare R2 bucket.

If you're not already familiar with it, Apache Iceberg ↗ is an open table format designed to handle large-scale analytics datasets stored in object storage, offering ACID transactions and schema evolution. R2 Data Catalog exposes a standard Iceberg REST catalog interface, so you can connect engines like Spark, Snowflake, and PyIceberg to start querying your tables using the tools you already know.

To enable a data catalog on your R2 bucket, find R2 Data Catalog in your bucket's settings in the dashboard, or run:

```sh
npx wrangler r2 bucket catalog enable my-bucket
```

And that's it. You'll get a catalog URI and warehouse you can plug into your favorite Iceberg engines.

Visit our getting started guide for step-by-step instructions on enabling R2 Data Catalog, creating tables, and running your first queries.

-

Cloudflare Pipelines is now available in beta, to all users with a Workers Paid plan.

Pipelines let you ingest high volumes of real-time data without managing the underlying infrastructure. A single pipeline can ingest up to 100 MB of data per second, via HTTP or from a Worker. Ingested data is automatically batched, written to output files, and delivered to an R2 bucket in your account. You can use Pipelines to build a data lake of clickstream data, or to store events from a Worker.

Create your first pipeline with a single command:

```sh
$ npx wrangler@latest pipelines create my-clickstream-pipeline --r2-bucket my-bucket

🌀 Authorizing R2 bucket "my-bucket"
🌀 Creating pipeline named "my-clickstream-pipeline"
✅ Successfully created pipeline my-clickstream-pipeline

Id:    0e00c5ff09b34d018152af98d06f5a1xvc
Name:  my-clickstream-pipeline
Sources:
  HTTP:
    Endpoint:        https://0e00c5ff09b34d018152af98d06f5a1xvc.pipelines.cloudflare.com/
    Authentication:  off
    Format:          JSON
  Worker:
    Format:  JSON
Destination:
  Type:         R2
  Bucket:       my-bucket
  Format:       newline-delimited JSON
  Compression:  GZIP
Batch hints:
  Max bytes:     100 MB
  Max duration:  300 seconds
  Max records:   100,000

🎉 You can now send data to your pipeline!

Send data to your pipeline's HTTP endpoint:
curl "https://0e00c5ff09b34d018152af98d06f5a1xvc.pipelines.cloudflare.com/" -d '[{ ...JSON_DATA... }]'

To send data to your pipeline from a Worker, add the following configuration to your config file:
{
  "pipelines": [
    {
      "pipeline": "my-clickstream-pipeline",
      "binding": "PIPELINE"
    }
  ]
}
```

Head over to our getting started guide for an in-depth tutorial to building with Pipelines.
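Once the `PIPELINE` binding is configured, a Worker can forward events to the pipeline. The sketch below is illustrative only: it assumes the binding exposes a `send()` method that accepts an array of JSON-serializable records, and `PipelineLike`, `ClickEvent`, `toClickEvent`, and `recordClicks` are hypothetical names. Check the Pipelines documentation for the exact binding API.

```typescript
// Structural stand-in for the Worker binding (assumption: it has a send() method
// that takes an array of records).
interface PipelineLike {
  send(records: object[]): Promise<void>;
}

// Hypothetical clickstream record.
interface ClickEvent {
  url: string;
  userId: string;
  timestamp: string; // ISO 8601
}

function toClickEvent(url: string, userId: string, at: Date): ClickEvent {
  return { url, userId, timestamp: at.toISOString() };
}

// Send a batch of events and report how many were handed to the pipeline.
async function recordClicks(
  pipeline: PipelineLike,
  events: ClickEvent[],
): Promise<number> {
  if (events.length === 0) return 0; // nothing to send
  await pipeline.send(events);
  return events.length;
}

// Inside a fetch handler, usage might look like:
//   await recordClicks(env.PIPELINE, [toClickEvent(request.url, userId, new Date())]);
```

Sending several events per call, rather than one call per event, keeps the number of requests low while the pipeline handles batching into output files.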

-

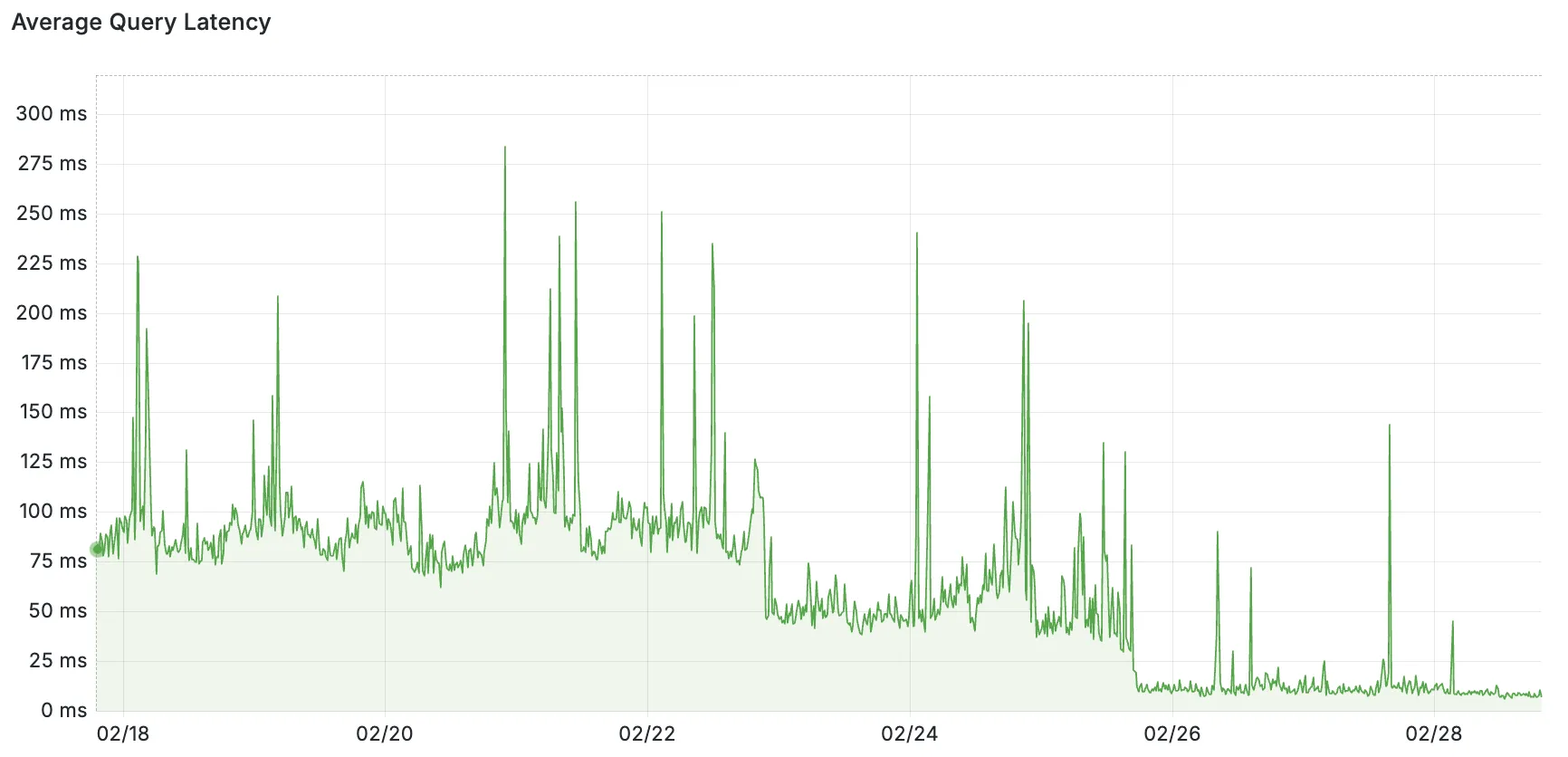

D1 read replication is available in public beta to help lower average latency and increase overall throughput for read-heavy applications like e-commerce websites or content management tools.

Workers can leverage read-only database copies, called read replicas, by using the D1 Sessions API. A session encapsulates all the queries from one logical session for your application. For example, a session may correspond to all queries coming from a particular web browser session. With the Sessions API, D1 queries in a session are guaranteed to be sequentially consistent to avoid data consistency pitfalls. D1 bookmarks can be used from a previous session to ensure logical consistency between sessions.

```ts
// retrieve bookmark from previous session stored in HTTP header
const bookmark = request.headers.get("x-d1-bookmark") ?? "first-unconstrained";

const session = env.DB.withSession(bookmark);
const result = await session
  .prepare(`SELECT * FROM Customers WHERE CompanyName = 'Bs Beverages'`)
  .run();

// store bookmark for a future session
response.headers.set("x-d1-bookmark", session.getBookmark() ?? "");
```

Read replicas are automatically created by Cloudflare (currently one in each supported D1 region), are active/inactive based on query traffic, and are transparently routed to by Cloudflare at no additional cost.

To check out D1 read replication, deploy the following Worker code using the Sessions API, which will prompt you to create a D1 database and enable read replication on said database.

To learn more about how read replication was implemented, go to our blog post ↗.

-

Hyperdrive now supports more SSL/TLS security options for your database connections:

- Configure Hyperdrive to verify server certificates with `verify-ca` or `verify-full` SSL modes and protect against man-in-the-middle attacks

- Configure Hyperdrive to provide client certificates to the database server to authenticate itself (mTLS) for stronger security beyond username and password

Use the new `wrangler cert` commands to create certificate authority (CA) certificate bundles or client certificate pairs:

```sh
# Create CA certificate bundle
npx wrangler cert upload certificate-authority --ca-cert your-ca-cert.pem --name your-custom-ca-name

# Create client certificate pair
npx wrangler cert upload mtls-certificate --cert client-cert.pem --key client-key.pem --name your-client-cert-name
```

Then create a Hyperdrive configuration with the certificates and desired SSL mode:

```sh
npx wrangler hyperdrive create your-hyperdrive-config \
  --connection-string="postgres://user:password@hostname:port/database" \
  --ca-certificate-id <CA_CERT_ID> \
  --mtls-certificate-id <CLIENT_CERT_ID> \
  --sslmode verify-full
```

Learn more about configuring SSL/TLS certificates for Hyperdrive to enhance your database security posture.

-

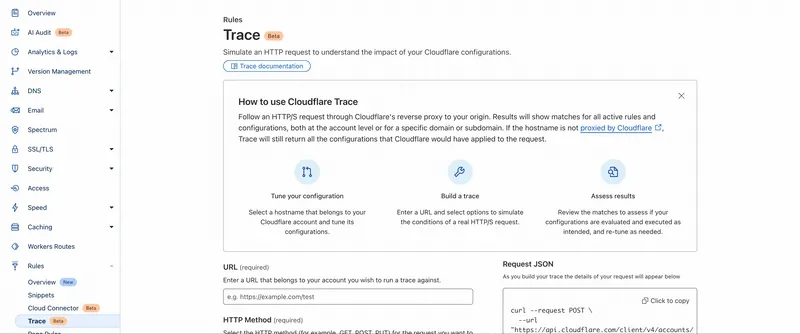

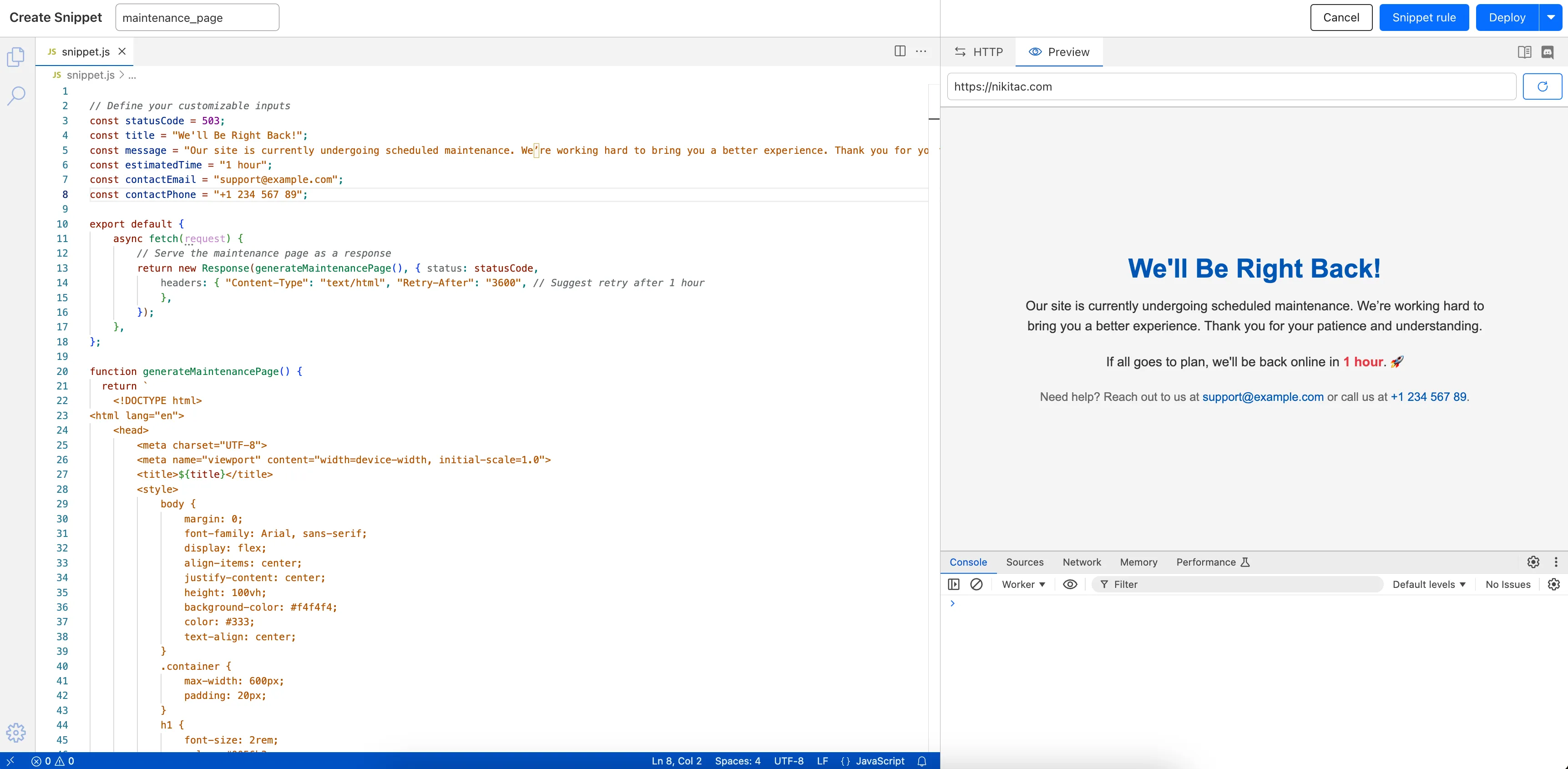

Cloudflare Snippets are now generally available at no extra cost across all paid plans — giving you a fast, flexible way to programmatically control HTTP traffic using lightweight JavaScript.

You can now use Snippets to modify HTTP requests and responses with confidence, reliability, and scale. Snippets are production-ready and deeply integrated with Cloudflare Rules, making them ideal for everything from quick dynamic header rewrites to advanced routing logic.

What's new:

- Snippets are now GA – Available at no extra cost on all Pro, Business, and Enterprise plans.

- Ready for production – Snippets deliver a production-grade experience built for scale.

- Part of the Cloudflare Rules platform – Snippets inherit request modifications from other Cloudflare products and support sequential execution, allowing you to run multiple Snippets on the same request and apply custom modifications step by step.

- Trace integration – Use Cloudflare Trace to see which Snippets were triggered on a request, helping you understand traffic flow and debug more effectively.

Learn more in the launch blog post ↗.

-

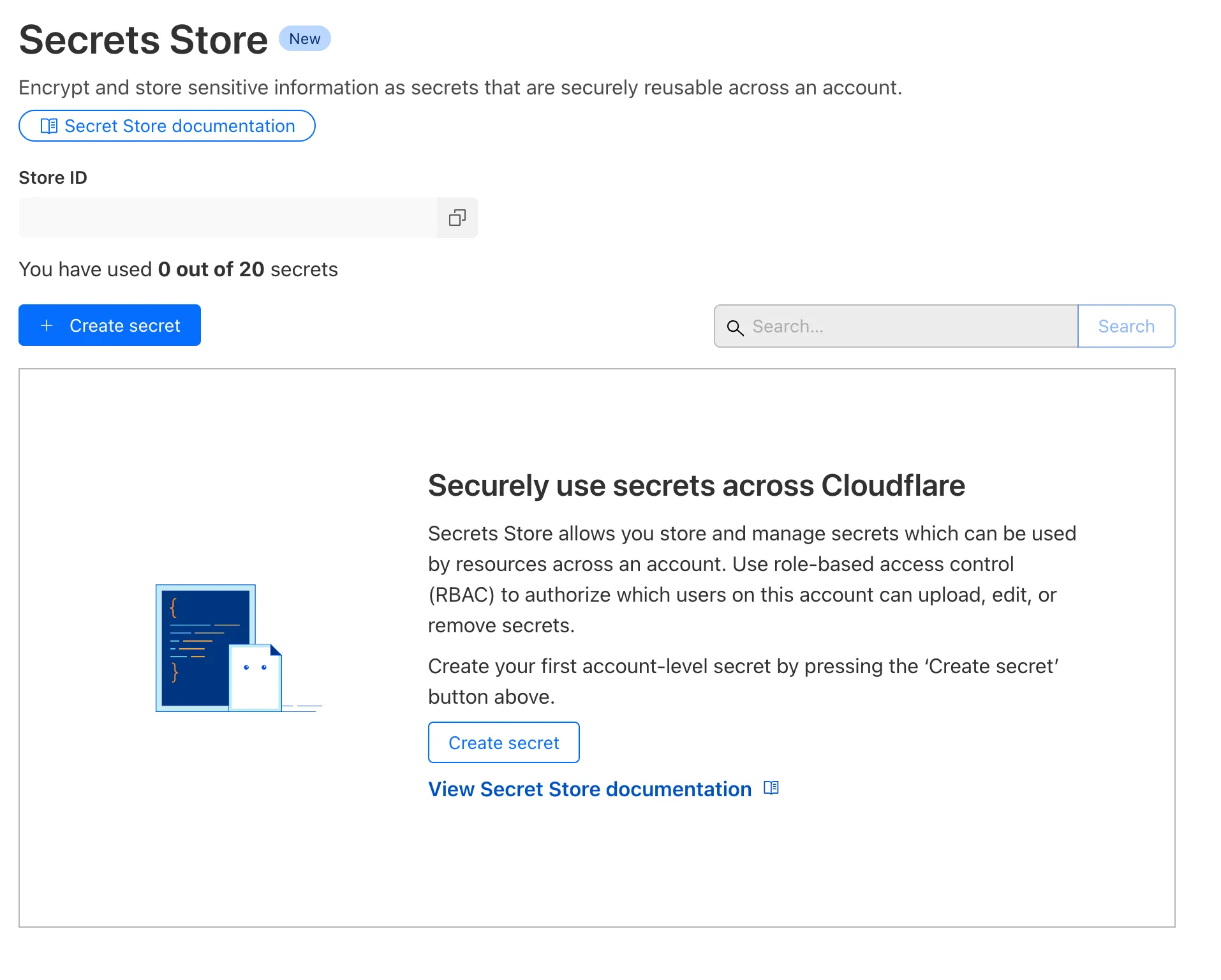

Cloudflare Secrets Store is available today in Beta. You can now store, manage, and deploy account-level secrets from a secure, centralized platform to your Workers.

To spin up your Cloudflare Secrets Store, simply click the new Secrets Store tab in the dashboard ↗ or use this Wrangler command:

```sh
wrangler secrets-store store create <name> --remote
```

The following are supported in the Secrets Store beta:

- Secrets Store UI & API: create your store & create, duplicate, update, scope, and delete a secret

- Workers UI: bind a new or existing account level secret to a Worker and deploy in code

- Wrangler: create your store & create, duplicate, update, scope, and delete a secret

- Account Management UI & API: assign Secrets Store permissions roles & view audit logs for actions taken in Secrets Store core platform

For instructions on how to get started, visit our developer documentation.

-

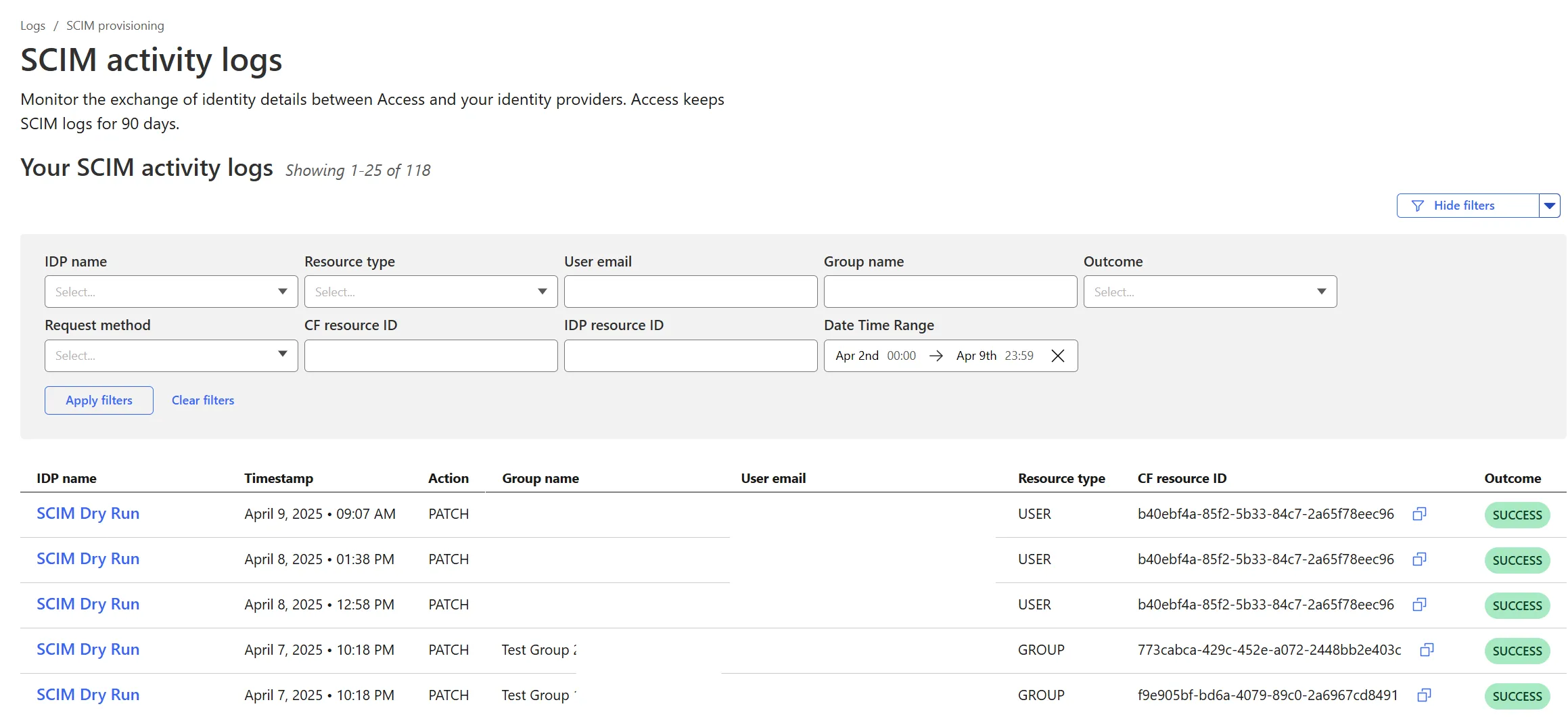

Cloudflare Zero Trust SCIM provisioning now has a full audit log of all create, update, and delete events from any SCIM-enabled IdP. The SCIM logs support filtering by IdP, event type, result, and many more fields. This helps with debugging user and group update issues and answering related questions.

SCIM logs can be found on the Zero Trust Dashboard under Logs -> SCIM provisioning.

-

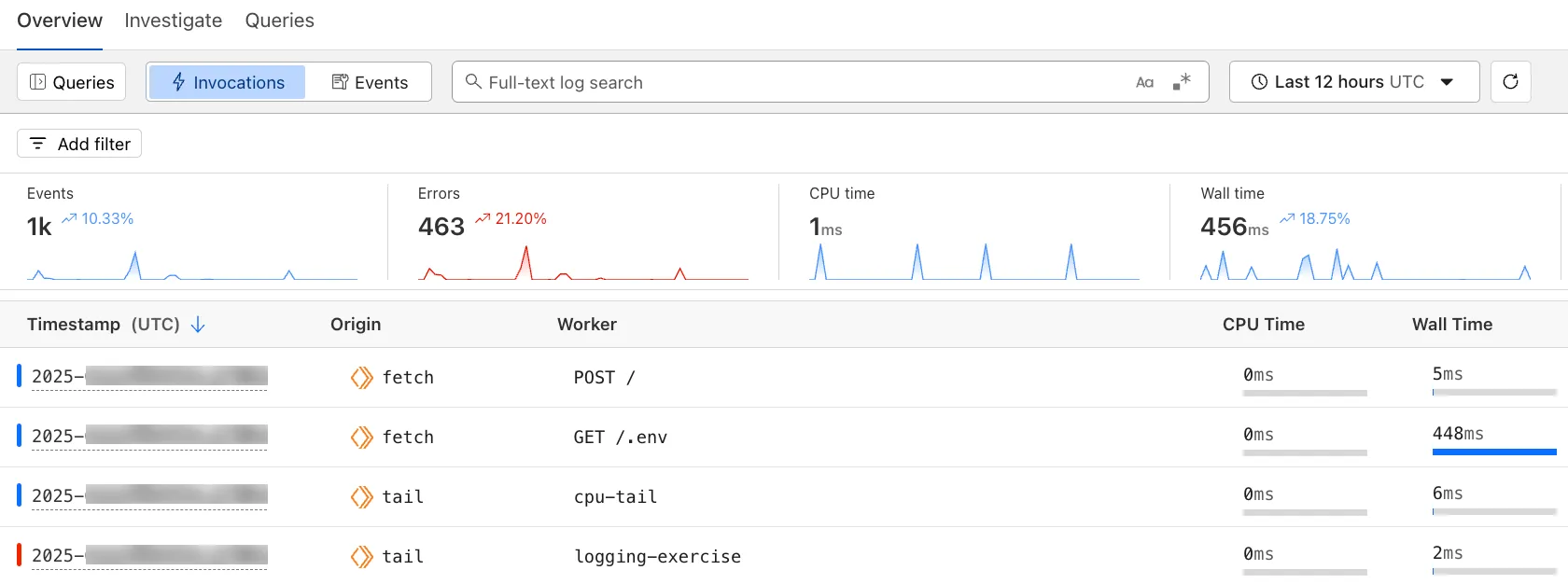

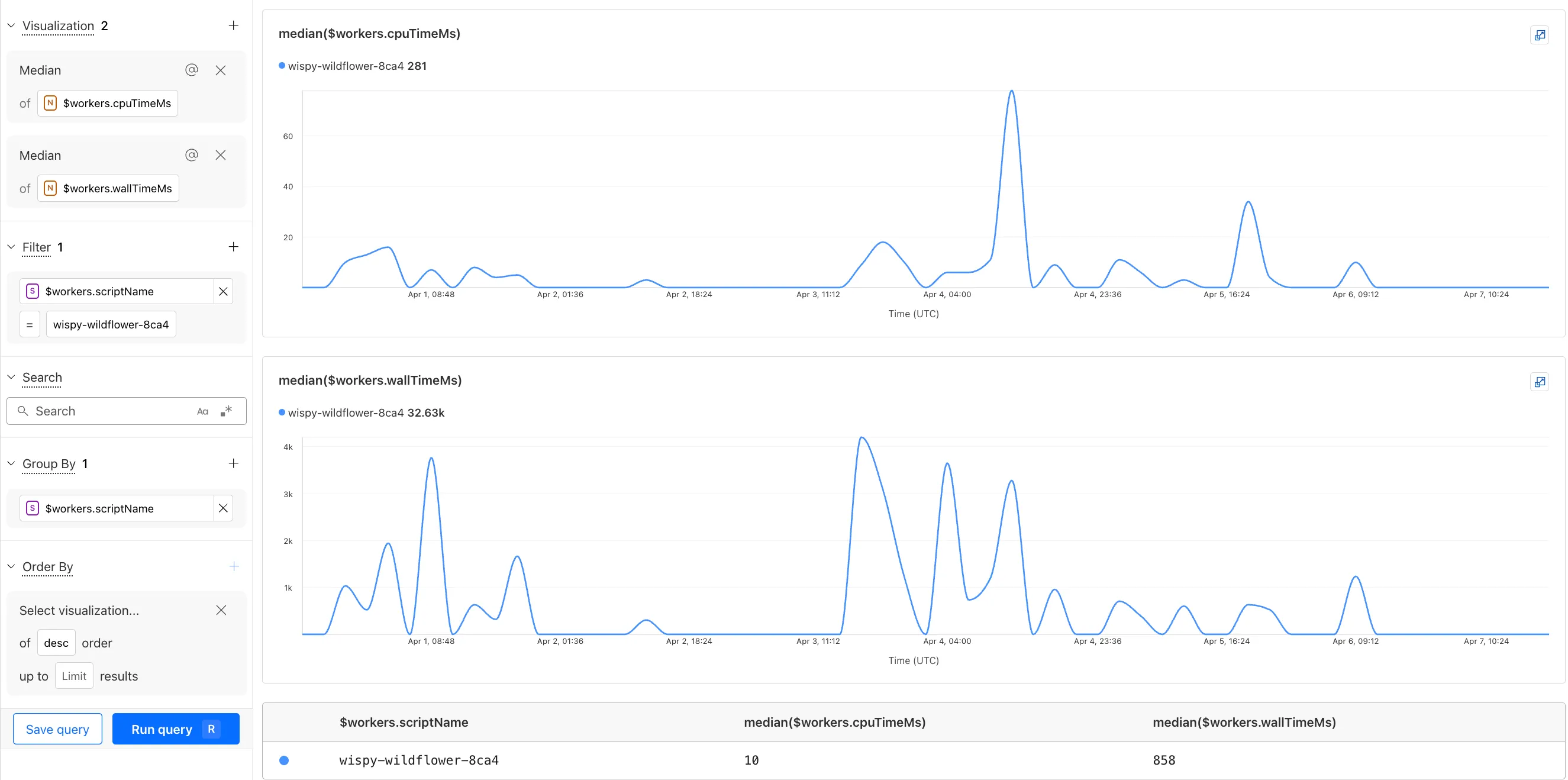

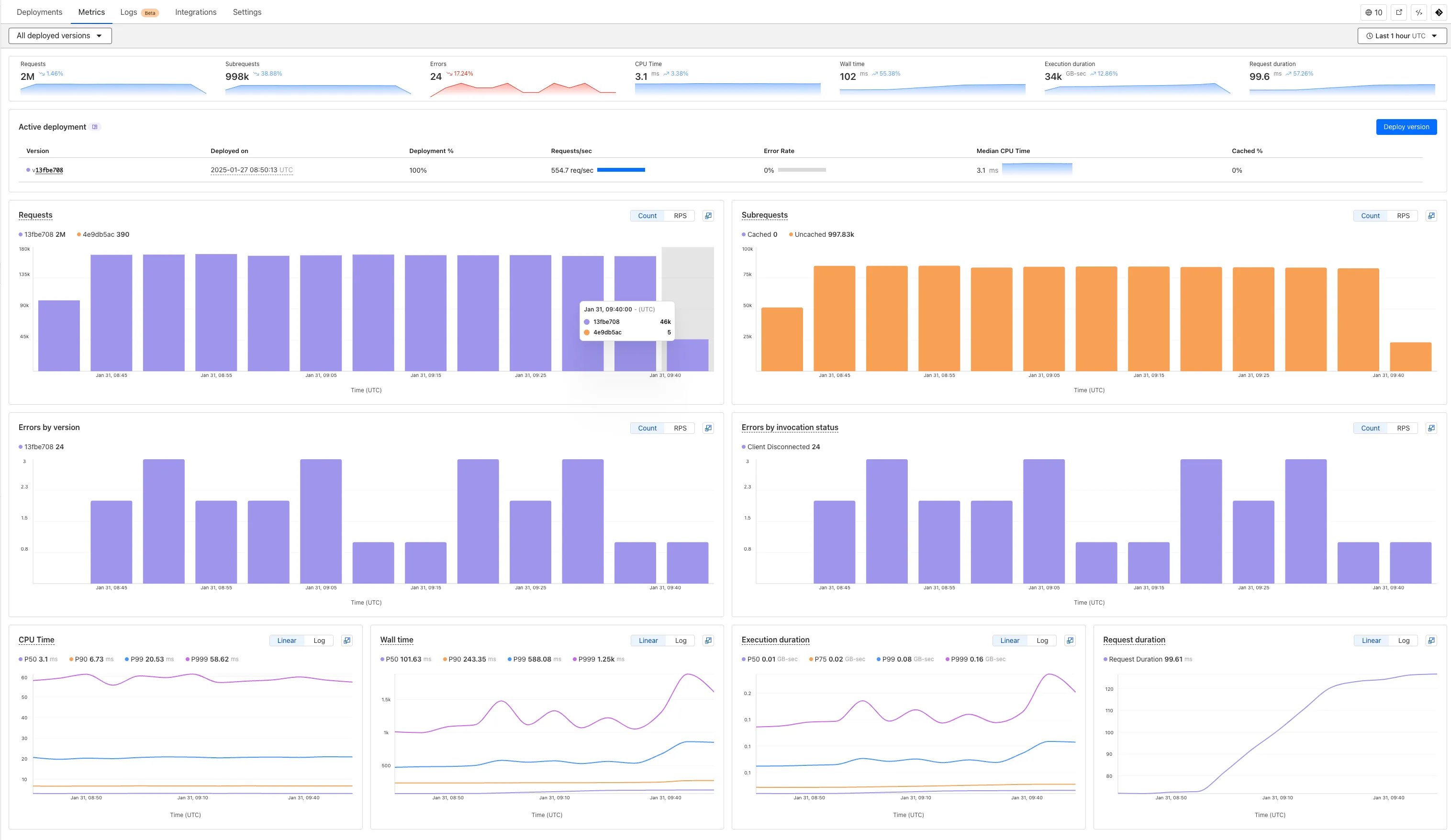

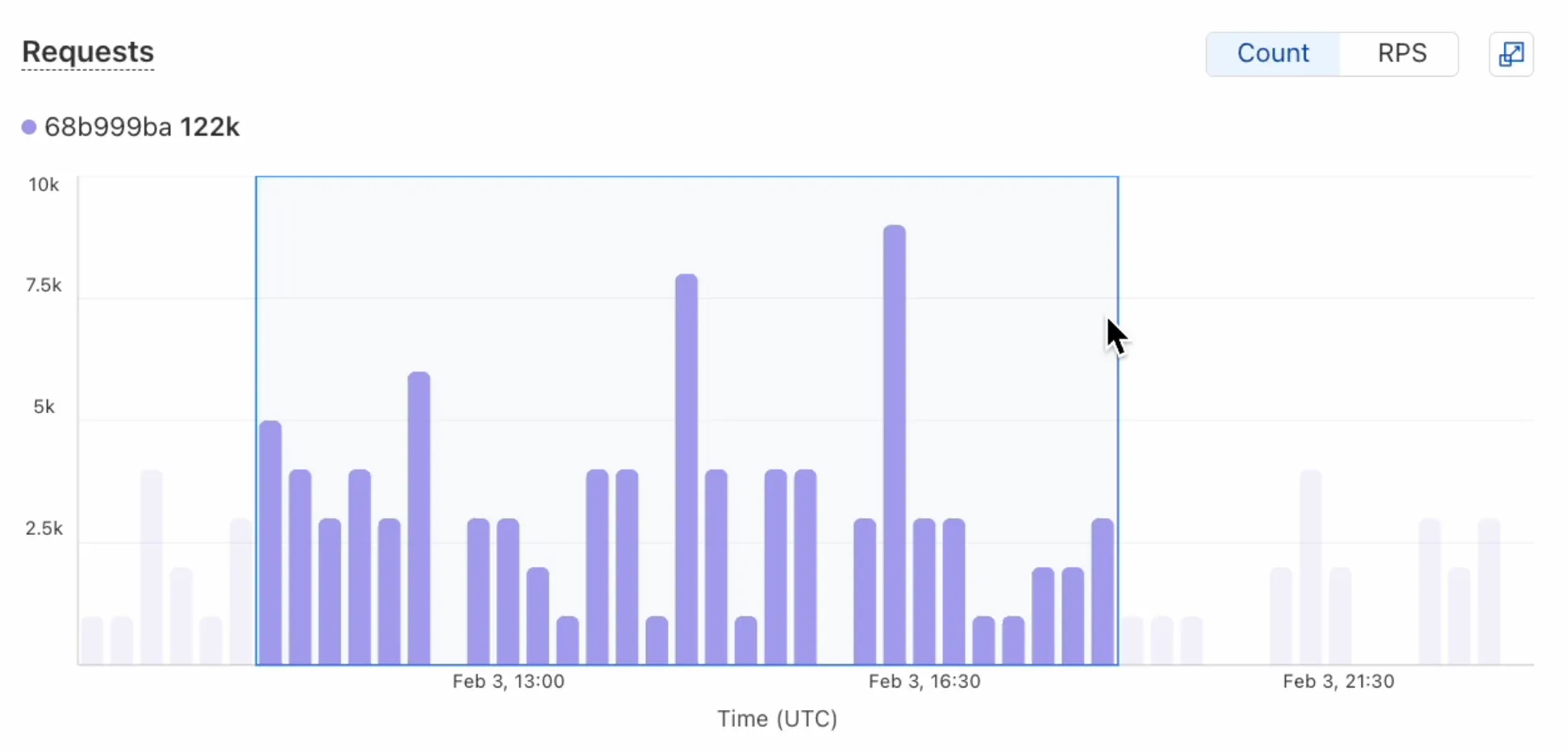

The Workers Observability dashboard ↗ offers a single place to investigate and explore your Workers Logs.

The Overview tab shows logs from all your Workers in one place. The Invocations view groups logs together by invocation, which refers to the specific trigger that started the execution of the Worker (e.g. fetch). The Events view shows logs in the order they were produced, based on timestamp. Previously, you could only view logs for a single Worker.

The Investigate tab presents a Query Builder, which helps you write structured queries to investigate and visualize your logs. The Query Builder can help answer questions such as:

- Which paths are experiencing the most 5XX errors?

- What is the wall time distribution by status code for my Worker?

- What are the slowest requests, and where are they coming from?

- Who are my top N users?

The Query Builder can use any field that you store in your logs as a key to visualize, filter, and group by. Use the Query Builder to quickly access your data, build visualizations, save queries, and share them with your team.

Workers Logs is now Generally Available. With a small change to your Wrangler configuration, Workers Logs ingests, indexes, and stores all logs emitted from your Workers for up to 7 days.

We've introduced a number of changes during our beta period, including:

- Dashboard enhancements with customizable fields as columns in the Logs view and support for invocation-based grouping

- Performance improvements to ensure no adverse impact

- Public API endpoints ↗ for broader consumption

The API documents three endpoints: list the keys in the telemetry dataset, run a query, and list the unique values for a key. For more, visit our REST API documentation ↗.

Visit the docs to learn more about the capabilities and methods exposed by the Query Builder. Start using Workers Logs and the Query Builder today by enabling observability for your Workers:

```jsonc
{
  "observability": {
    "enabled": true,
    "logs": {
      "invocation_logs": true,
      "head_sampling_rate": 1
    }
  }
}
```

```toml
[observability]
enabled = true

[observability.logs]
invocation_logs = true
head_sampling_rate = 1 # optional. default = 1.
```

-

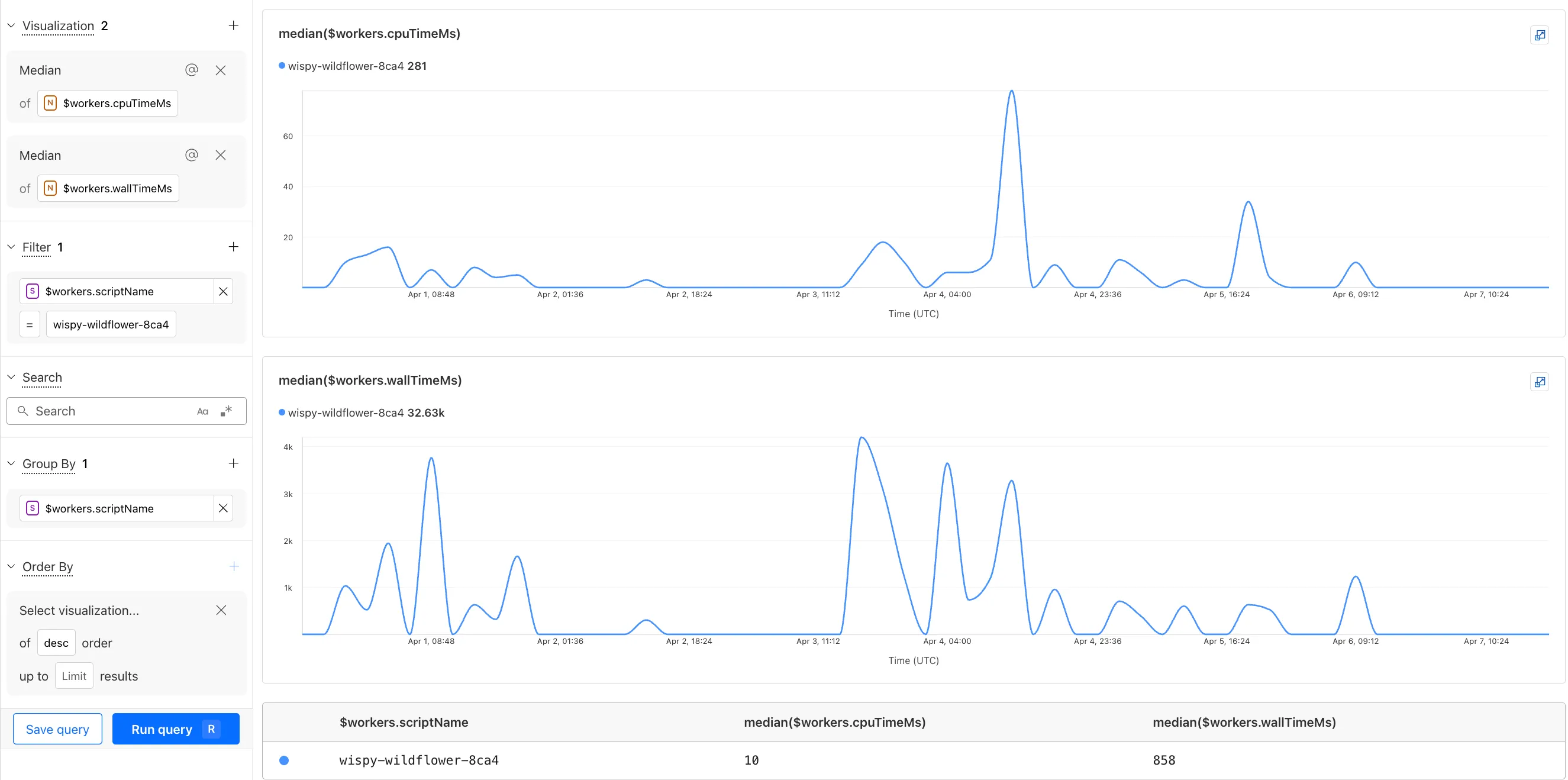

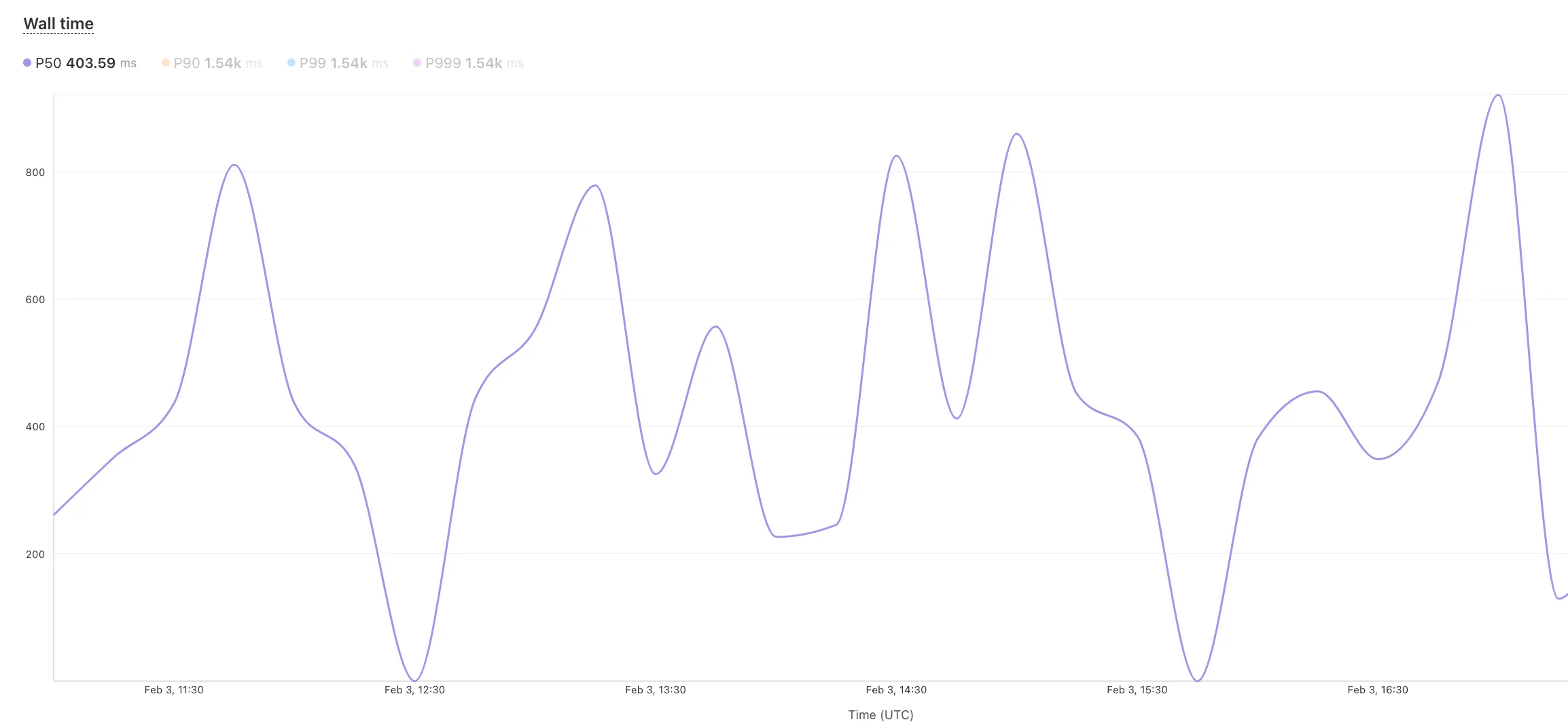

You can now observe and investigate the CPU time and Wall time for every Workers invocation.

- For Workers Logs, CPU time and Wall time are surfaced in the Invocation Log.

- For Tail Workers, CPU time and Wall time are surfaced at the top level of the Workers Trace Events object.

- For Workers Logpush, CPU and Wall time are surfaced at the top level of the Workers Trace Events object. All new jobs will have these new fields included by default. Existing jobs need to be updated to include CPU time and Wall time.

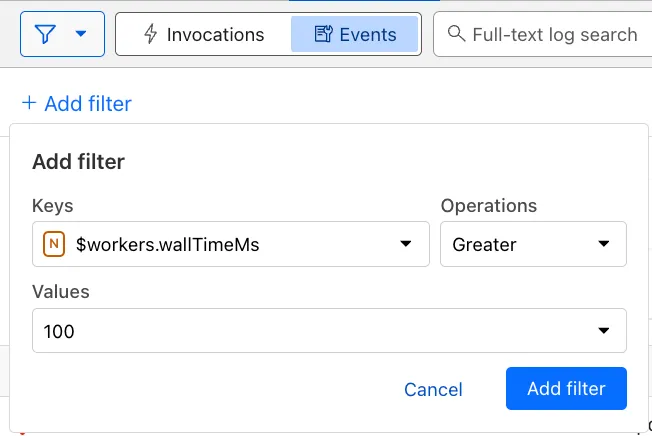

You can use a Workers Logs filter to search for logs where Wall time exceeds 100ms.

You can also use the Workers Observability Query Builder ↗ to find the median CPU time and median Wall time for all of your Workers.

-

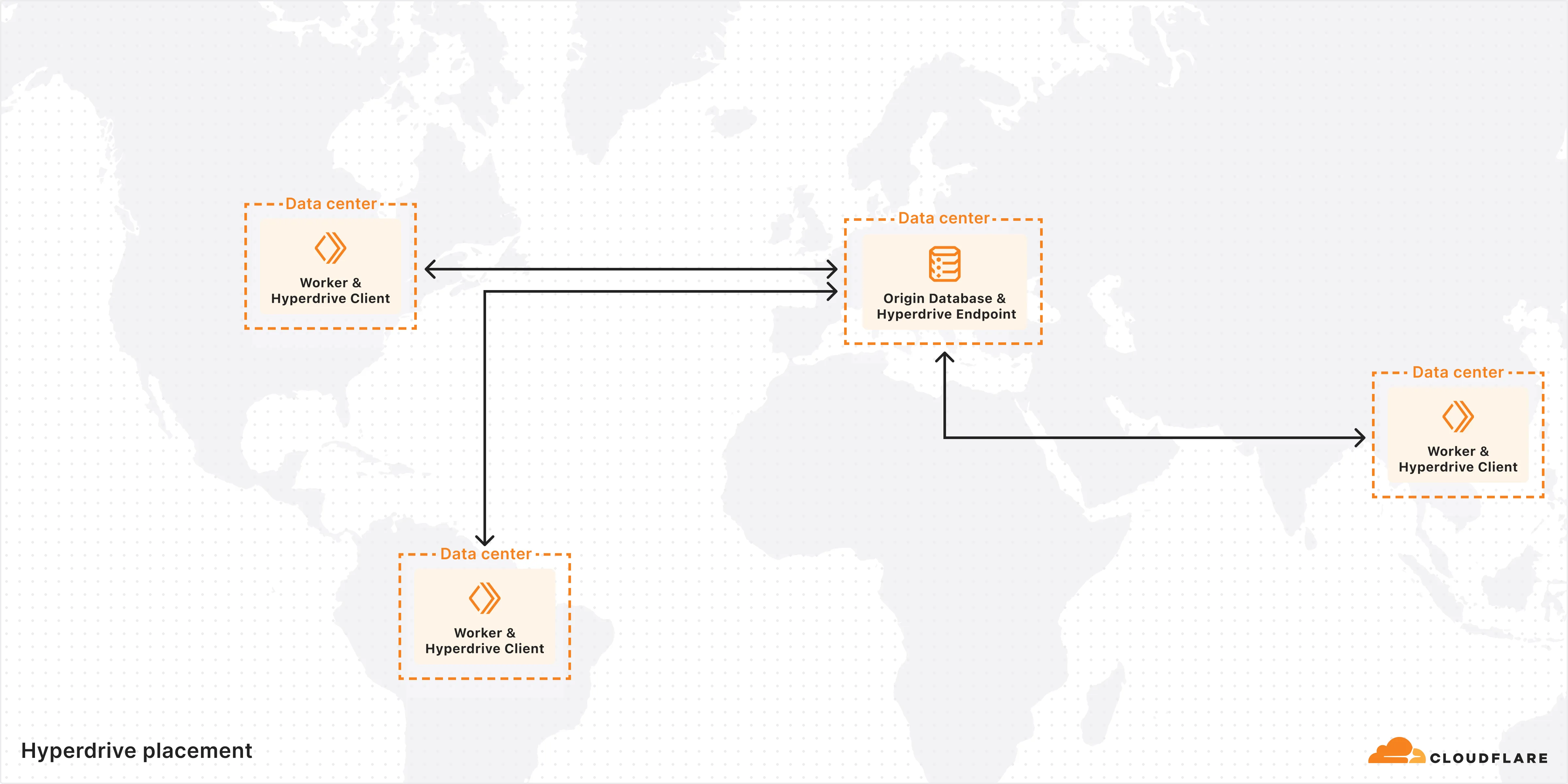

Hyperdrive is now available on the Free plan of Cloudflare Workers, enabling you to build Workers that connect to PostgreSQL or MySQL databases without compromise.

Low-latency access to SQL databases is critical to building full-stack Workers applications. We want you to be able to build fast, global apps on Workers, regardless of the tools you use. So we made Hyperdrive available for all, to make it easier to build Workers that connect to PostgreSQL and MySQL.

If you want to learn more about how Hyperdrive works, read the deep dive ↗ on how Hyperdrive can make your database queries up to 4x faster.

Visit the docs to get started with Hyperdrive for PostgreSQL or MySQL.

-

The following full-stack frameworks now have Generally Available ("GA") adapters for Cloudflare Workers, and are ready for you to use in production:

The following frameworks are now in beta, with GA support coming very soon:

- Next.js, supported through @opennextjs/cloudflare ↗ is now `v1.0-beta`.

- Angular

- SolidJS (SolidStart)

You can also build complete full-stack apps on Workers without a framework:

- You can "just use Vite" ↗ and React together, and build a back-end API in the same Worker. Follow our React SPA with an API tutorial to learn how.

Get started building today with our framework guides, or read our Developer Week 2025 blog post ↗ about all the updates to building full-stack applications on Workers.

-

The Cloudflare Vite plugin has reached v1.0 ↗ and is now Generally Available ("GA").

When you use `@cloudflare/vite-plugin`, you can use Vite's local development server and build tooling, while ensuring that while developing, your code runs in `workerd` ↗, the open-source Workers runtime.

This lets you get the best of both worlds for a full-stack app: you can use Hot Module Replacement ↗ from Vite right alongside Durable Objects and other runtime APIs and bindings that are unique to Cloudflare Workers.

`@cloudflare/vite-plugin` is made possible by the new environment API ↗ in Vite, and was built in partnership with the Vite team ↗.

You can build any type of application with `@cloudflare/vite-plugin`, using any rendering mode, from single page applications (SPA) and static sites to server-side rendered (SSR) pages and API routes.

React Router v7 (Remix) is the first full-stack framework to provide full support for Cloudflare Vite plugin, allowing you to use all parts of Cloudflare's developer platform, without additional build steps.

You can also build complete full-stack apps on Workers without a framework — "just use Vite" ↗ and React together, and build a back-end API in the same Worker. Follow our React SPA with an API tutorial to learn how.

If you're already using Vite ↗ in your build and development toolchain, you can start using our plugin with minimal changes to your `vite.config.ts`:

```ts
// vite.config.ts
import { defineConfig } from "vite";
import { cloudflare } from "@cloudflare/vite-plugin";

export default defineConfig({
  plugins: [cloudflare()],
});
```

Take a look at the documentation for our Cloudflare Vite plugin for more information!

-

Email Workers enables developers to programmatically take action on anything that hits their email inbox. If you're building with Email Workers, you can now test the behavior of an Email Worker script, receiving, replying and sending emails in your local environment using `wrangler dev`.

Below is an example that shows you how you can receive messages using the `email()` handler and parse them using postal-mime ↗:

```ts
import * as PostalMime from 'postal-mime';

export default {
  async email(message, env, ctx) {
    const parser = new PostalMime.default();
    const rawEmail = new Response(message.raw);
    const email = await parser.parse(await rawEmail.arrayBuffer());
    console.log(email);
  },
};
```

Now when you run `npx wrangler dev`, wrangler will expose a local `/cdn-cgi/handler/email` endpoint that you can `POST` email messages to and trigger your Worker's `email()` handler:

```sh
curl -X POST 'http://localhost:8787/cdn-cgi/handler/email' \
  --url-query 'from=sender@example.com' \
  --url-query 'to=recipient@example.com' \
  --header 'Content-Type: application/json' \
  --data-raw 'Received: from smtp.example.com (127.0.0.1)
        by cloudflare-email.com (unknown) id 4fwwffRXOpyR
        for <recipient@example.com>; Tue, 27 Aug 2024 15:50:20 +0000
From: "John" <sender@example.com>
Reply-To: sender@example.com
To: recipient@example.com
Subject: Testing Email Workers Local Dev
Content-Type: text/html; charset="windows-1252"
X-Mailer: Curl
Date: Tue, 27 Aug 2024 08:49:44 -0700
Message-ID: <6114391943504294873000@ZSH-GHOSTTY>

Hi there'
```

This is what you get in the console:

```js
{
  headers: [
    {
      key: 'received',
      value: 'from smtp.example.com (127.0.0.1) by cloudflare-email.com (unknown) id 4fwwffRXOpyR for <recipient@example.com>; Tue, 27 Aug 2024 15:50:20 +0000'
    },
    { key: 'from', value: '"John" <sender@example.com>' },
    { key: 'reply-to', value: 'sender@example.com' },
    { key: 'to', value: 'recipient@example.com' },
    { key: 'subject', value: 'Testing Email Workers Local Dev' },
    { key: 'content-type', value: 'text/html; charset="windows-1252"' },
    { key: 'x-mailer', value: 'Curl' },
    { key: 'date', value: 'Tue, 27 Aug 2024 08:49:44 -0700' },
    {
      key: 'message-id',
      value: '<6114391943504294873000@ZSH-GHOSTTY>'
    }
  ],
  from: { address: 'sender@example.com', name: 'John' },
  to: [ { address: 'recipient@example.com', name: '' } ],
  replyTo: [ { address: 'sender@example.com', name: '' } ],
  subject: 'Testing Email Workers Local Dev',
  messageId: '<6114391943504294873000@ZSH-GHOSTTY>',
  date: '2024-08-27T15:49:44.000Z',
  html: 'Hi there\n',
  attachments: []
}
```

Local development is a critical part of the development flow, and also works for sending, replying and forwarding emails. See our documentation for more information.

-

Hyperdrive now supports connecting to MySQL and MySQL-compatible databases, including Amazon RDS and Aurora MySQL, Google Cloud SQL for MySQL, Azure Database for MySQL, PlanetScale and MariaDB.

Hyperdrive makes your regional MySQL databases fast when connecting from Cloudflare Workers. It eliminates unnecessary network roundtrips during connection setup, pools database connections globally, and can cache query results to provide the fastest possible response times.

Best of all, you can connect using your existing drivers, ORMs, and query builders with Hyperdrive's secure credentials, no code changes required.

```ts
import { createConnection } from "mysql2/promise";

export interface Env {
  HYPERDRIVE: Hyperdrive;
}

export default {
  async fetch(request, env, ctx): Promise<Response> {
    const connection = await createConnection({
      host: env.HYPERDRIVE.host,
      user: env.HYPERDRIVE.user,
      password: env.HYPERDRIVE.password,
      database: env.HYPERDRIVE.database,
      port: env.HYPERDRIVE.port,
      disableEval: true, // Required for Workers compatibility
    });

    const [results, fields] = await connection.query("SHOW tables;");

    ctx.waitUntil(connection.end());

    return new Response(JSON.stringify({ results, fields }), {
      headers: {
        "Content-Type": "application/json",
        "Access-Control-Allow-Origin": "*",
      },
    });
  },
} satisfies ExportedHandler<Env>;
```

Learn more about how Hyperdrive works and get started building Workers that connect to MySQL with Hyperdrive.

-

When using a Worker with the `nodejs_compat` compatibility flag enabled, the following Node.js APIs are now available:

This makes it easier to reuse existing Node.js code in Workers or use npm packages that depend on these APIs.

The full `node:crypto` ↗ API is now available in Workers.

You can use it to verify and sign data:

```js
import { sign, verify } from "node:crypto";

const signature = sign("sha256", "-data to sign-", env.PRIVATE_KEY);
const verified = verify("sha256", "-data to sign-", env.PUBLIC_KEY, signature);
```

Or, to encrypt and decrypt data:

```js
import { publicEncrypt, privateDecrypt } from "node:crypto";

const encrypted = publicEncrypt(env.PUBLIC_KEY, "some data");
const plaintext = privateDecrypt(env.PRIVATE_KEY, encrypted);
```

See the `node:crypto` documentation for more information.

The following APIs from `node:tls` are now available:

This enables secure connections over TLS (Transport Layer Security) to external services.

```js
import { connect } from "node:tls";

// ... in a request handler ...
const connectionOptions = { key: env.KEY, cert: env.CERT };
const socket = connect(url, connectionOptions, () => {
  if (socket.authorized) {
    console.log("Connection authorized");
  }
});

socket.on("data", (data) => {
  console.log(data);
});

socket.on("end", () => {
  console.log("server ends connection");
});
```

See the `node:tls` documentation for more information.

-

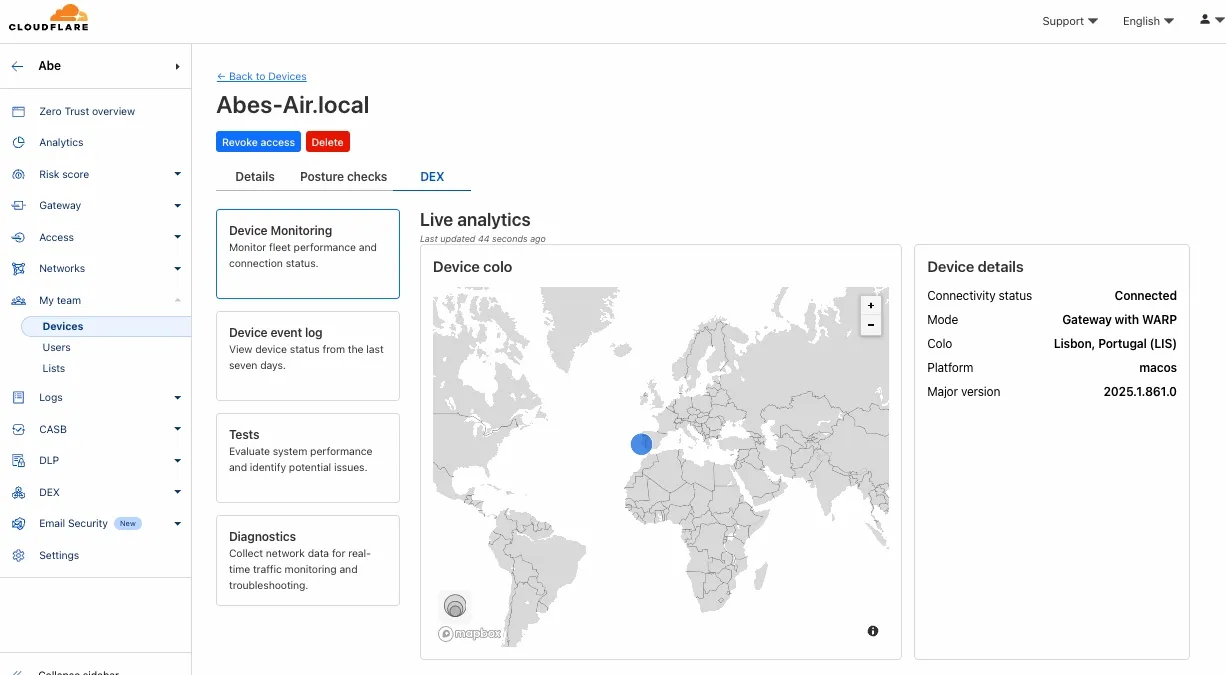

A new GA release for the macOS WARP client is now available on the Stable release downloads page. This release contains a hotfix for captive portal detection and PF state tables for the 2025.2.600.0 release.

Changes and improvements

- Fix to reduce the number of browser tabs opened during captive portal logins.

- Improvement to exclude local DNS traffic entries from PF state table to reduce risk of connectivity issues from exceeding table capacity.

Known issues

- macOS Sequoia: Due to changes Apple introduced in macOS 15.0.x, the WARP client may not behave as expected. Cloudflare recommends the use of macOS 15.4 or later.

-

A new GA release for the Windows WARP client is now available on the Stable release downloads page. This release contains a hotfix for captive portal detection for the 2025.2.600.0 release.

Changes and improvements

- Fix to reduce the number of browser tabs opened during captive portal logins.

Known issues

- DNS resolution may be broken when the following conditions are all true:

  - WARP is in Secure Web Gateway without DNS filtering (tunnel-only) mode.

  - A custom DNS server address is configured on the primary network adapter.

  - The custom DNS server address on the primary network adapter is changed while WARP is connected.

  To work around this issue, reconnect the WARP client by toggling off and back on.

-

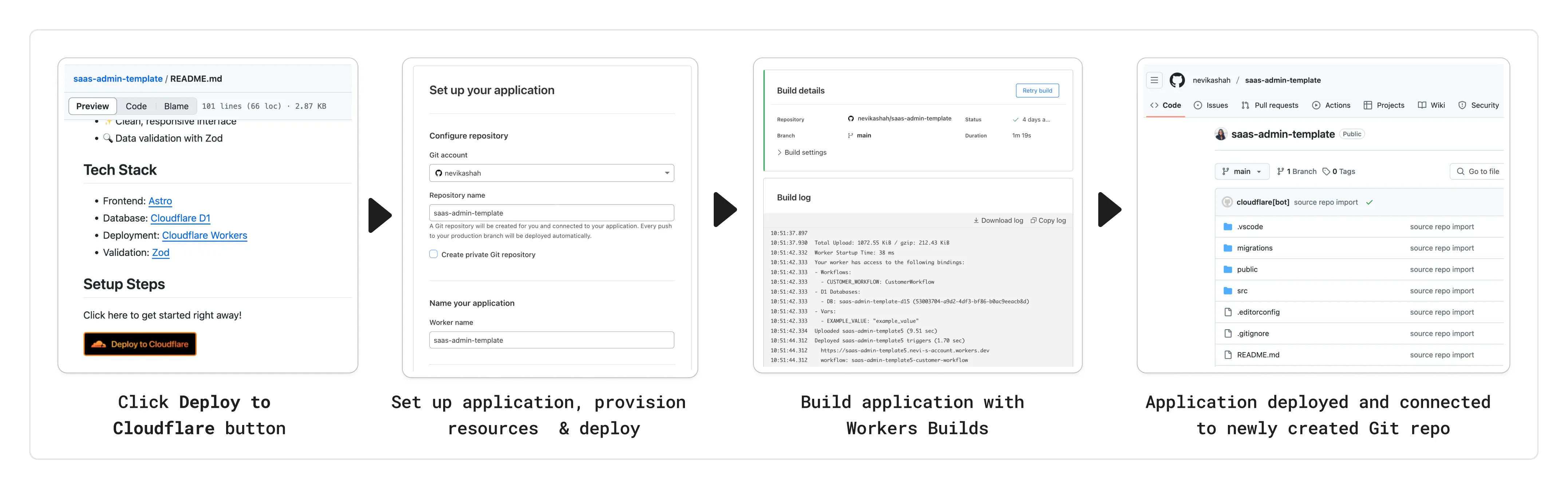

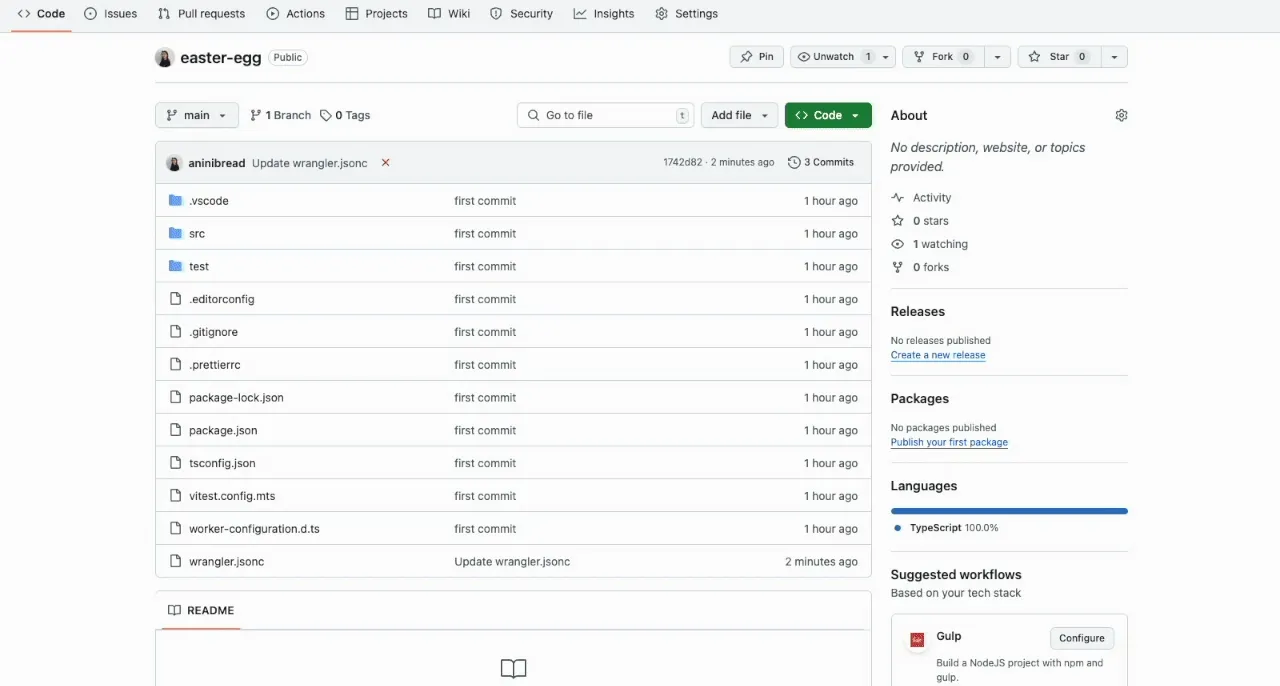

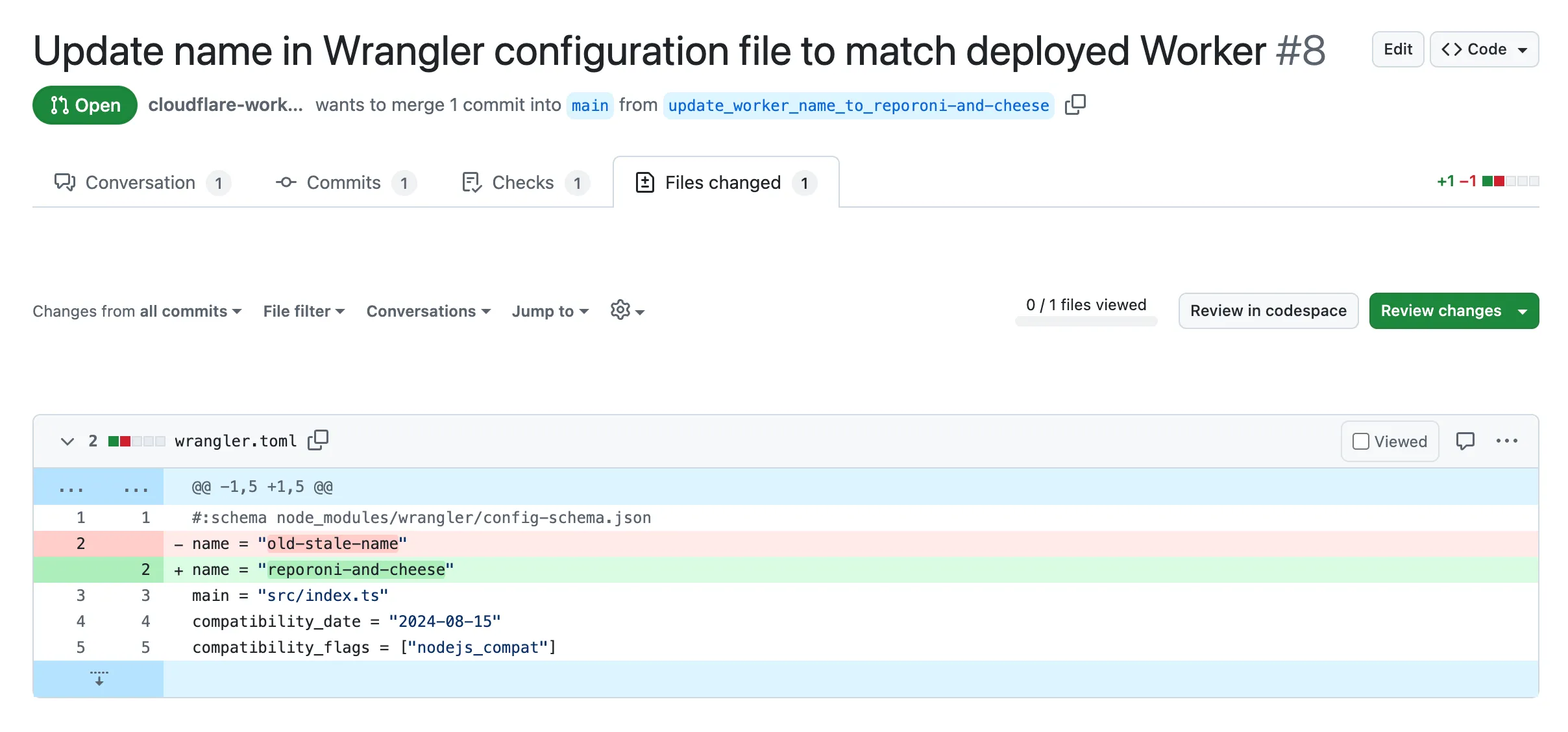

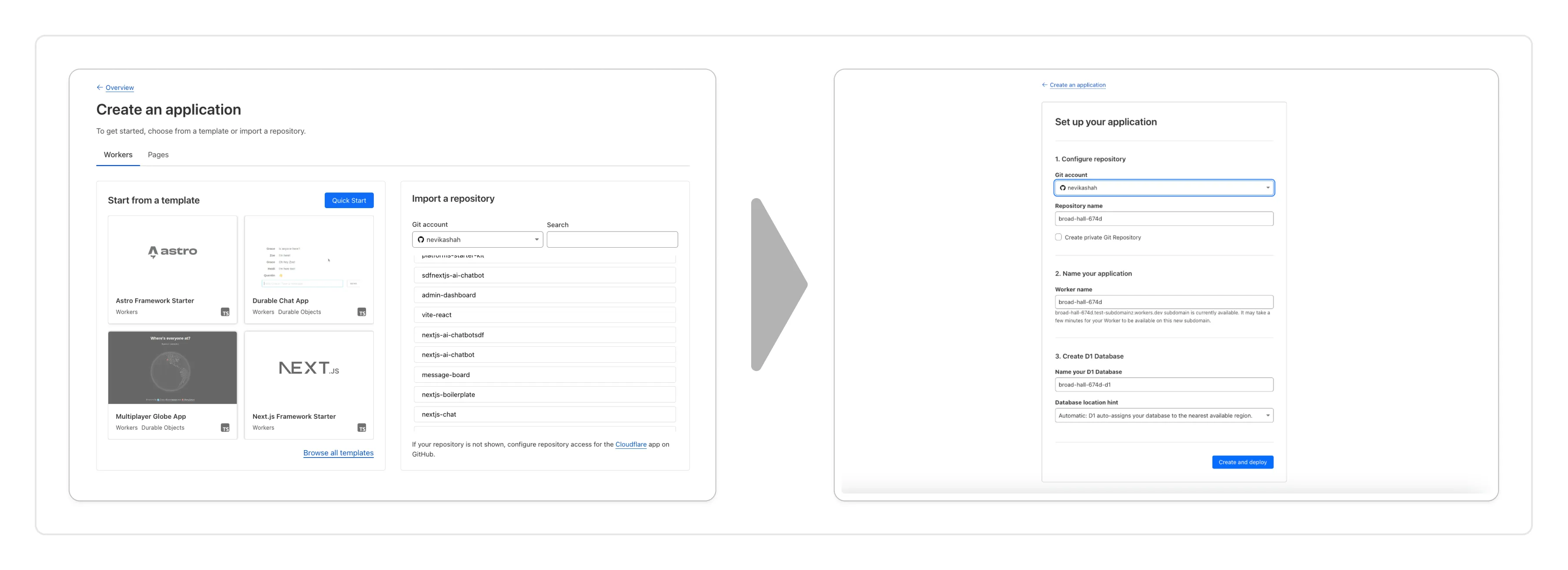

You can now add a Deploy to Cloudflare button to the README of your Git repository containing a Workers application — making it simple for other developers to quickly set up and deploy your project!

The Deploy to Cloudflare button:

- Creates a new Git repository on your GitHub/GitLab account: Cloudflare will automatically clone and create a new repository on your account, so you can continue developing.

- Automatically provisions resources the app needs: If your repository requires Cloudflare primitives like a Workers KV namespace, a D1 database, or an R2 bucket, Cloudflare will automatically provision them on your account and bind them to your Worker upon deployment.

- Configures Workers Builds (CI/CD): Every new push to your production branch on your newly created repository will automatically build and deploy courtesy of Workers Builds.

- Adds preview URLs to each pull request: If you'd like to test your changes before deploying, you can push changes to a non-production branch and preview URLs will be generated and posted back to GitHub as a comment.

To create a Deploy to Cloudflare button in your README, you can add the following snippet, including your Git repository URL:

```md
[](https://deploy.workers.cloudflare.com/?url=<YOUR_GIT_REPO_URL>)
```

Check out our documentation for more information on how to set up a deploy button for your application and best practices to ensure a successful deployment for other developers.
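If you generate READMEs for several repositories, the link target in the snippet above can be built programmatically. The following is a minimal sketch, not part of any Cloudflare SDK: `buildDeployLink` is a hypothetical helper, and it percent-encodes the repository URL on the assumption that the deploy endpoint decodes the `url` query parameter in the usual way.

```typescript
// Hypothetical helper: builds the Deploy to Cloudflare link for a repository.
// The base URL and the `url` query parameter come from the README snippet;
// everything else here is illustrative.
const DEPLOY_BASE = "https://deploy.workers.cloudflare.com/";

function buildDeployLink(repoUrl: string): string {
  // `new URL` throws a TypeError on malformed input, which surfaces typos early.
  const repo = new URL(repoUrl);
  const link = new URL(DEPLOY_BASE);
  // searchParams.set percent-encodes the value for us.
  link.searchParams.set("url", repo.toString());
  return link.toString();
}

console.log(buildDeployLink("https://github.com/example/my-worker"));
```

Using `URL` rather than string concatenation keeps the query string valid even when the repository URL itself contains characters such as `?` or `&`.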

-

The Agents SDK now includes built-in support for building remote MCP (Model Context Protocol) servers directly as part of your Agent. This allows you to easily create and manage MCP servers, without the need for additional infrastructure or configuration.

The SDK includes a new `McpAgent` class that extends the `Agent` class and allows you to expose resources and tools over the MCP protocol, as well as authorization and authentication to enable remote MCP servers.

```js
import { McpAgent } from "agents/mcp";
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { z } from "zod";

export class MyMCP extends McpAgent {
  server = new McpServer({
    name: "Demo",
    version: "1.0.0",
  });

  async init() {
    this.server.resource(`counter`, `mcp://resource/counter`, (uri) => {
      // ...
    });

    this.server.tool(
      "add",
      "Add two numbers together",
      { a: z.number(), b: z.number() },
      async ({ a, b }) => {
        // ...
      },
    );
  }
}
```

```ts
import { McpAgent } from "agents/mcp";
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { z } from "zod";

export class MyMCP extends McpAgent<Env> {
  server = new McpServer({
    name: "Demo",
    version: "1.0.0",
  });

  async init() {
    this.server.resource(`counter`, `mcp://resource/counter`, (uri) => {
      // ...
    });

    this.server.tool(
      "add",
      "Add two numbers together",
      { a: z.number(), b: z.number() },
      async ({ a, b }) => {
        // ...
      },
    );
  }
}
```

See the example ↗ for the full code and as the basis for building your own MCP servers, and the client example ↗ for how to build an Agent that acts as an MCP client.

To learn more, review the announcement blog ↗ as part of Developer Week 2025.

We've made a number of improvements to the Agents SDK, including:

- Support for building MCP servers with the new `McpAgent` class.

- The ability to export the current agent, request and WebSocket connection context using `import { context } from "agents"`, allowing you to minimize or avoid direct dependency injection when calling tools.

- Fixed a bug that prevented query parameters from being sent to the Agent server from the `useAgent` React hook.

- Automatically converting the `agent` name in `useAgent` or `useAgentChat` to kebab-case to ensure it matches the naming convention expected by `routeAgentRequest`.
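To make the kebab-case conversion in the last item concrete, here is a rough sketch of what such a normalization could look like. `toKebabCase` is a hypothetical function written for illustration; it is not the Agents SDK's actual implementation, and the SDK may treat edge cases (acronyms, digits) differently.

```typescript
// Illustrative only: converts camelCase, PascalCase, snake_case or spaced
// names into kebab-case. Not taken from the Agents SDK source.
function toKebabCase(name: string): string {
  return name
    // Insert a hyphen at each lower-case/digit to upper-case boundary.
    .replace(/([a-z0-9])([A-Z])/g, "$1-$2")
    // Collapse underscores and whitespace into single hyphens.
    .replace(/[_\s]+/g, "-")
    .toLowerCase();
}

console.log(toKebabCase("MyChatAgent"));
```

With a normalization like this, a class named `MyChatAgent` and a client that asks for `my-chat-agent` resolve to the same name.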

To install or update the Agents SDK, run `npm i agents@latest` in an existing project, or explore the `agents-starter` project:

```sh
npm create cloudflare@latest -- --template cloudflare/agents-starter
```

See the full release notes and changelog on the Agents SDK repository ↗.

-

Workflows is now Generally Available (or "GA"): in short, it's ready for production workloads. Alongside marking Workflows as GA, we've introduced a number of changes during the beta period, including:

- A new `waitForEvent` API that allows a Workflow to wait for an event to occur before continuing execution.

- Increased concurrency: you can run up to 4,500 Workflow instances concurrently, and this will continue to grow.

- Improved observability, including new CPU time metrics that allow you to better understand which Workflow instances are consuming the most resources and/or contributing to your bill.

- Support for `vitest` for testing Workflows locally and in CI/CD pipelines.

Workflows also supports the new increased CPU limits that apply to Workers, allowing you to run more CPU-intensive tasks (up to 5 minutes of CPU time per instance), not including the time spent waiting on network calls, AI models, or other I/O bound tasks.

The new `step.waitForEvent` API allows a Workflow instance to wait on events and data, enabling human-in-the-loop interactions, such as approving or rejecting a request, directly handling webhooks from other systems, or pushing event data to a Workflow while it's running.

Because Workflows are just code, you can conditionally execute code based on the result of a `waitForEvent` call, and/or call `waitForEvent` multiple times in a single Workflow based on what the Workflow needs.

For example, if you wanted to implement a human-in-the-loop approval process, you could use `waitForEvent` to wait for a user to approve or reject a request, and then conditionally execute code based on the result.

```js
import { WorkflowEntrypoint } from "cloudflare:workers";

export class MyWorkflow extends WorkflowEntrypoint {
  async run(event, step) {
    // Other steps in your Workflow
    // Named `stripeEvent` so it does not redeclare the `event` parameter
    const stripeEvent = await step.waitForEvent(
      "receive invoice paid webhook from Stripe",
      { type: "stripe-webhook", timeout: "1 hour" },
    );
    // Rest of your Workflow
  }
}
```

```ts
import { WorkflowEntrypoint, WorkflowEvent, WorkflowStep } from "cloudflare:workers";

export class MyWorkflow extends WorkflowEntrypoint<Env, Params> {
  async run(event: WorkflowEvent<Params>, step: WorkflowStep) {
    // Other steps in your Workflow
    // Named `stripeEvent` so it does not redeclare the `event` parameter
    const stripeEvent = await step.waitForEvent<IncomingStripeWebhook>(
      "receive invoice paid webhook from Stripe",
      { type: "stripe-webhook", timeout: "1 hour" },
    );
    // Rest of your Workflow
  }
}
```

You can then send a Workflow an event from an external service via HTTP or from within a Worker using the Workers API for Workflows:

```js
export default {
  async fetch(req, env) {
    const instanceId = new URL(req.url).searchParams.get("instanceId");
    const webhookPayload = await req.json();

    let instance = await env.MY_WORKFLOW.get(instanceId);
    // Send our event, with `type` matching the event type defined in
    // our step.waitForEvent call
    await instance.sendEvent({
      type: "stripe-webhook",
      payload: webhookPayload,
    });

    return Response.json({
      status: await instance.status(),
    });
  },
};
```

```ts
export default {
  async fetch(req: Request, env: Env) {
    const instanceId = new URL(req.url).searchParams.get("instanceId");
    const webhookPayload = await req.json<Payload>();

    let instance = await env.MY_WORKFLOW.get(instanceId);
    // Send our event, with `type` matching the event type defined in
    // our step.waitForEvent call
    await instance.sendEvent({ type: "stripe-webhook", payload: webhookPayload });

    return Response.json({
      status: await instance.status(),
    });
  },
};
```

Read the GA announcement blog ↗ to learn more about what landed as part of the Workflows GA.

-

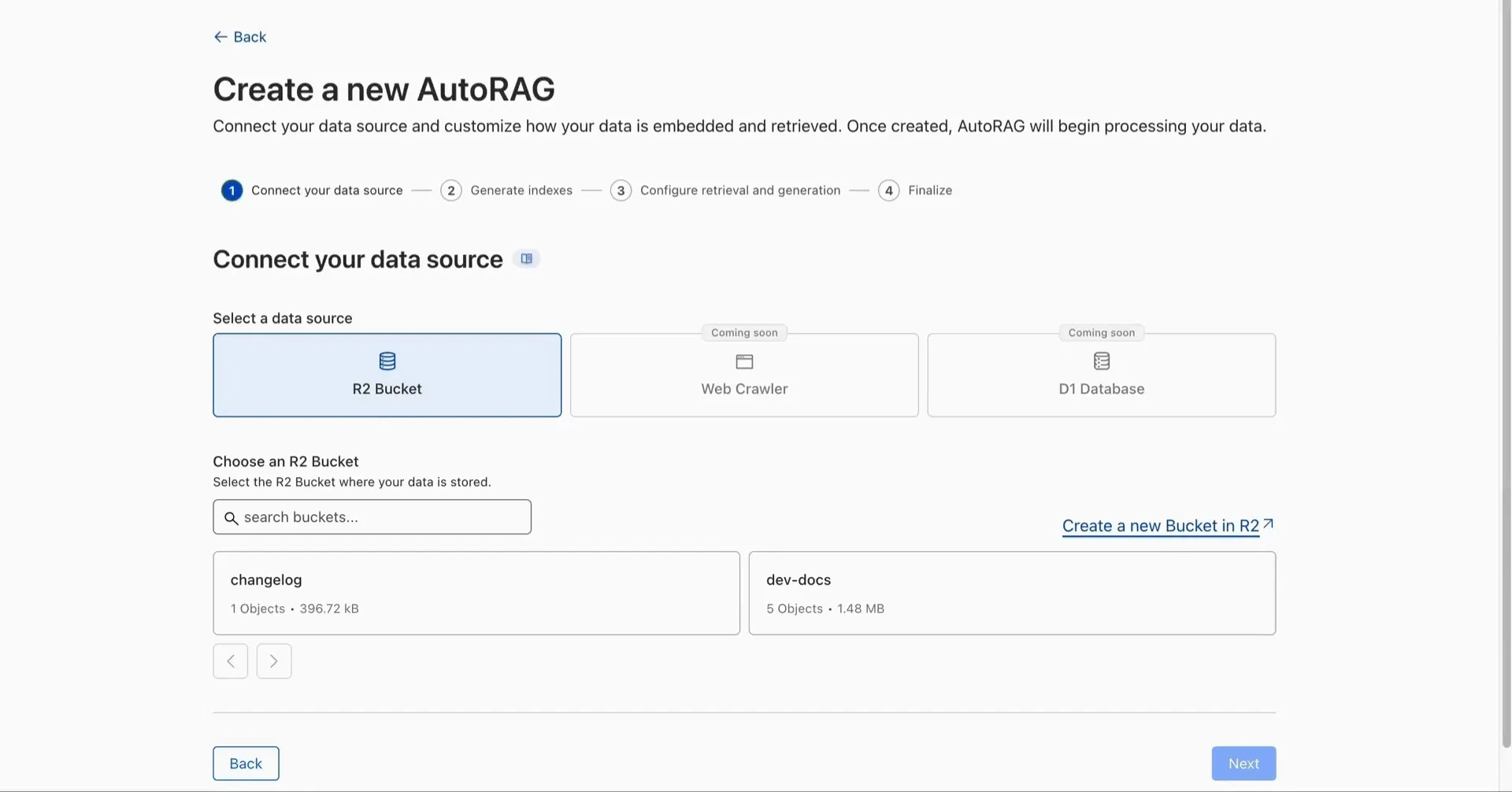

AutoRAG is now in open beta, making it easy for you to build fully-managed retrieval-augmented generation (RAG) pipelines without managing infrastructure. Just upload your docs to R2, and AutoRAG handles the rest: embeddings, indexing, retrieval, and response generation via API.

With AutoRAG, you can:

- Customize your pipeline: Choose from Workers AI models, configure chunking strategies, edit system prompts, and more.

- Instant setup: AutoRAG provisions everything you need, from Vectorize and AI Gateway to pipeline logic, so you can go from zero to a working RAG pipeline in seconds.

- Keep your index fresh: AutoRAG continuously syncs your index with your data source to ensure responses stay accurate and up to date.

- Ask questions: Query your data and receive grounded responses via a Workers binding or API.

Whether you're building internal tools, AI-powered search, or a support assistant, AutoRAG gets you from idea to deployment in minutes.

Get started in the Cloudflare dashboard ↗ or check out the guide for instructions on how to build your RAG pipeline today.

-

We’re excited to announce Browser Rendering is now available on the Workers Free plan ↗, making it even easier to prototype and experiment with web search and headless browser use-cases when building applications on Workers.

The Browser Rendering REST API is now Generally Available, allowing you to control browser instances from outside of Workers applications. We've added three new endpoints to help automate more browser tasks:

- Extract structured data – Use `/json` to retrieve structured data from a webpage.

- Retrieve links – Use `/links` to pull all links from a webpage.

- Convert to Markdown – Use `/markdown` to convert webpage content into Markdown format.

For example, to fetch the Markdown representation of a webpage:

```sh
curl -X 'POST' 'https://api.cloudflare.com/client/v4/accounts/<accountId>/browser-rendering/markdown' \
  -H 'Content-Type: application/json' \
  -H 'Authorization: Bearer <apiToken>' \
  -d '{"url": "https://example.com"}'
```

For the full list of endpoints, check out our REST API documentation. You can also interact with Browser Rendering via the Cloudflare TypeScript SDK ↗.
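The same request can be assembled in code before handing it to `fetch`. The sketch below mirrors the curl call above; `buildRenderRequest` and the `RenderRequest` type are hypothetical names, and it assumes the `/links` endpoint accepts the same `{"url": ...}` body as `/markdown`. Check the REST API documentation before relying on that.

```typescript
// Hypothetical helper mirroring the curl example. It only builds the request;
// sending it is left to the caller, e.g. fetch(req.url, req.init).
type RenderEndpoint = "markdown" | "links";

interface RenderRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

function buildRenderRequest(
  accountId: string,
  apiToken: string,
  endpoint: RenderEndpoint,
  pageUrl: string,
): RenderRequest {
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/browser-rendering/${endpoint}`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiToken}`,
      },
      // Assumption: both endpoints take the target page as `url`.
      body: JSON.stringify({ url: pageUrl }),
    },
  };
}
```

Separating request construction from the network call makes the request easy to log or unit test without spending Browser Rendering quota.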

We also recently landed support for Playwright in Browser Rendering for browser automation from Cloudflare Workers, in addition to Puppeteer, giving you more flexibility to test across different browser environments.

Visit the Browser Rendering docs to learn more about how to use headless browsers in your applications.


-

SQLite in Durable Objects is now generally available (GA) with a 10 GB SQLite database per Durable Object. Since the public beta ↗ in September 2024, we've added feature parity and robustness for the SQLite storage backend compared to the preexisting key-value (KV) storage backend for Durable Objects.

SQLite-backed Durable Objects are recommended for all new Durable Object classes, using the `new_sqlite_classes` Wrangler configuration. Only SQLite-backed Durable Objects have access to the Storage API's SQL and point-in-time recovery methods, which provide relational data modeling, SQL querying, and better data management.

```ts
import { DurableObject } from "cloudflare:workers";

export class MyDurableObject extends DurableObject {
  sql: SqlStorage;

  constructor(ctx: DurableObjectState, env: Env) {
    super(ctx, env);
    this.sql = ctx.storage.sql;
  }

  async sayHello() {
    let result = this.sql.exec("SELECT 'Hello, World!' AS greeting").one();
    return result.greeting;
  }
}
```

KV-backed Durable Objects remain for backwards compatibility, and a migration path from key-value storage to SQL storage for existing Durable Object classes will be offered in the future.

For more details on SQLite storage, check out the Zero-latency SQLite storage in every Durable Object blog ↗.

-

Durable Objects can now be used with zero commitment on the Workers Free plan allowing you to build AI agents with Agents SDK, collaboration tools, and real-time applications like chat or multiplayer games.

Durable Objects let you build stateful, serverless applications with millions of tiny coordination instances that run your application code alongside (in the same thread!) your durable storage. Each Durable Object can access its own SQLite database through a Storage API. A Durable Object class is defined in a Worker script encapsulating the Durable Object's behavior when accessed from a Worker.

```js
import { DurableObject } from "cloudflare:workers";

// Durable Object
export class MyDurableObject extends DurableObject {
  // ...
  async sayHello(name) {
    return `Hello, ${name}!`;
  }
}

// Worker
export default {
  async fetch(request, env) {
    // Every unique ID refers to an individual instance of the Durable Object class
    const id = env.MY_DURABLE_OBJECT.idFromName("foo");

    // A stub is a client used to invoke methods on the Durable Object
    const stub = env.MY_DURABLE_OBJECT.get(id);

    // Methods on the Durable Object are invoked via the stub
    const response = await stub.sayHello("world");

    // A fetch handler must return a Response, so wrap the greeting string
    return new Response(response);
  },
};
```

Free plan limits apply to Durable Objects compute and storage usage. Limits allow developers to build real-world applications, with every Worker request able to call a Durable Object on the free plan.

For more information, check out:

-

You can now capture a maximum of 256 KB of log events per Workers invocation, helping you gain better visibility into application behavior.

All `console.log()` statements, exceptions, request metadata, and headers are automatically captured during the Worker invocation and emitted as a JSON object. Workers Logs deserializes this object before indexing the fields and storing them. You can also capture, transform, and export the JSON object in a Tail Worker.

256 KB is a 2x increase from the previous 128 KB limit. After you exceed this limit, further context associated with the request will not be recorded in your logs.

This limit is automatically applied to all Workers.
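If you log large objects, it can help to estimate how much of the per-invocation budget they consume. The sketch below measures the UTF-8 size of values once serialized to JSON. It is an approximation under stated assumptions: the helper names are hypothetical, 256 KB is taken to mean 256 × 1024 bytes, and the platform's own accounting (which also covers request metadata and headers) may differ.

```typescript
// Assumption: 1 KB = 1024 bytes. The platform may count differently.
const LOG_LIMIT_BYTES = 256 * 1024;

// UTF-8 byte length of a value after JSON serialization.
function serializedSizeBytes(value: unknown): number {
  // JSON.stringify returns undefined for values such as `undefined`; treat as empty.
  const json: string = JSON.stringify(value) ?? "";
  return new TextEncoder().encode(json).length;
}

// True if all entries together stay within the byte budget.
function fitsLogBudget(entries: unknown[], limitBytes: number = LOG_LIMIT_BYTES): boolean {
  let total = 0;
  for (const entry of entries) {
    total += serializedSizeBytes(entry);
    if (total > limitBytes) return false;
  }
  return true;
}
```

A check like this is most useful in tests or local development, where you can catch a handler that would truncate its own logs before it ships.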

-

We're excited to share that you can now use Playwright's browser automation capabilities ↗ from Cloudflare Workers.

Playwright ↗ is an open-source package developed by Microsoft that can do browser automation tasks; it's commonly used to write software tests, debug applications, create screenshots, and crawl pages. Like Puppeteer, we forked ↗ Playwright and modified it to be compatible with Cloudflare Workers and Browser Rendering ↗.

Below is an example of how to use Playwright with Browser Rendering to test a TODO application using assertions:

```ts
import { launch, type BrowserWorker } from '@cloudflare/playwright';
import { expect } from '@cloudflare/playwright/test';

interface Env {
  MYBROWSER: BrowserWorker;
}

export default {
  async fetch(request: Request, env: Env) {
    const browser = await launch(env.MYBROWSER);
    const page = await browser.newPage();

    await page.goto('https://demo.playwright.dev/todomvc');

    const TODO_ITEMS = [
      'buy some cheese',
      'feed the cat',
      'book a doctors appointment'
    ];

    const newTodo = page.getByPlaceholder('What needs to be done?');
    for (const item of TODO_ITEMS) {
      await newTodo.fill(item);
      await newTodo.press('Enter');
    }

    await expect(page.getByTestId('todo-title')).toHaveCount(TODO_ITEMS.length);

    await Promise.all(TODO_ITEMS.map(
      (value, index) => expect(page.getByTestId('todo-title').nth(index)).toHaveText(value)
    ));

    // Added so the handler returns a Response: close the browser and report success
    await browser.close();
    return new Response('All assertions passed');
  },
};
```

Playwright is available as an npm package at `@cloudflare/playwright` ↗ and the code is at GitHub ↗.

Learn more in our documentation.

-

You can now access all Cloudflare cache purge methods — no matter which plan you’re on. Whether you need to update a single asset or instantly invalidate large portions of your site’s content, you now have the same powerful tools previously reserved for Enterprise customers.

Anyone on Cloudflare can now:

- Purge Everything: Clears all cached content associated with a website.

- Purge by Prefix: Targets URLs sharing a common prefix.

- Purge by Hostname: Invalidates content by specific hostnames.

- Purge by URL (single-file purge): Precisely targets individual URLs.

- Purge by Tag: Uses Cache-Tag response headers to invalidate grouped assets, offering flexibility for complex cache management scenarios.

Want to learn how each purge method works, when to use them, or what limits apply to your plan? Dive into our purge cache documentation and API reference ↗ for all the details.
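As a rough illustration of how the five methods differ at the API level, the sketch below maps each one to a request body for the zone-level `purge_cache` endpoint. The `PurgeRequest` type and `purgeBody` function are hypothetical names introduced here; the field names (`purge_everything`, `prefixes`, `hosts`, `files`, `tags`) follow the purge cache API as I understand it, so confirm them against the API reference before use.

```typescript
// Hypothetical typed wrapper around the purge methods listed above.
type PurgeRequest =
  | { kind: "everything" }
  | { kind: "prefix"; prefixes: string[] }
  | { kind: "hostname"; hosts: string[] }
  | { kind: "url"; files: string[] }
  | { kind: "tag"; tags: string[] };

// Returns the JSON body for POST /client/v4/zones/<zone_id>/purge_cache.
function purgeBody(req: PurgeRequest): Record<string, unknown> {
  switch (req.kind) {
    case "everything":
      return { purge_everything: true };
    case "prefix":
      return { prefixes: req.prefixes };
    case "hostname":
      return { hosts: req.hosts };
    case "url":
      return { files: req.files };
    case "tag":
      return { tags: req.tags };
  }
}

console.log(JSON.stringify(purgeBody({ kind: "tag", tags: ["product-images"] })));
```

Modelling the request as a discriminated union means the compiler rejects a body that mixes methods, for example tags together with `purge_everything`.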

-

A new GA release for the Linux WARP client is now available on the Stable release downloads page. This release contains support for a new WARP setting, Global WARP override. It also includes significant improvements to our captive portal / public Wi-Fi detection logic.

Changes and improvements

- Improved captive portal detection to make more public networks compatible and have faster detection.

- WARP tunnel protocol details can now be viewed using the `warp-cli tunnel stats` command.

- Fixed an issue with device revocation and re-registration when switching configurations.

- Added a new Global WARP override setting. This setting puts account administrators in control of disabling and enabling WARP across all devices registered to an account from the dashboard. Global WARP override is disabled by default.

-

A new GA release for the macOS WARP client is now available on the Stable release downloads page. This release contains support for a new WARP setting, Global WARP override. It also includes significant improvements to our captive portal / public Wi-Fi detection logic.

Changes and improvements

- Improved captive portal detection to make more public networks compatible and have faster detection.

- Improved error messages shown in the app.

- WARP tunnel protocol details can now be viewed using the `warp-cli tunnel stats` command.

- Fixed an issue with device revocation and re-registration when switching configurations.

- Added a new Global WARP override setting. This setting puts account administrators in control of disabling and enabling WARP across all devices registered to an account from the dashboard. Global WARP override is disabled by default.

Known issues

- macOS Sequoia: Due to changes Apple introduced in macOS 15.0.x, the WARP client may not behave as expected. Cloudflare recommends the use of macOS 15.3 or later.

-

A new GA release for the Windows WARP client is now available on the Stable release downloads page. This release contains support for a new WARP setting, Global WARP override. It also includes significant improvements to our captive portal / public Wi-Fi detection logic.

Changes and improvements

- Improved captive portal detection to make more public networks compatible and have faster detection.

- Improved error messages shown in the app.

- Added the ability to control if the WARP interface IPs are registered with DNS servers or not.

- Removed DNS logs view from the Windows client GUI. DNS logs can be viewed as part of `warp-diag` or by viewing the log file on the user's local directory.

- Fixed an issue that would result in a user receiving multiple re-authentication requests when waking their device from sleep.

- WARP tunnel protocol details can now be viewed using the `warp-cli tunnel stats` command.

- Improvements to Windows multi-user including support for fast user switching. If you are interested in testing this feature, reach out to your Cloudflare account team.

- Fixed an issue with device revocation and re-registration when switching configurations.

- Fixed an issue where DEX tests would run during certain sleep states where the networking stack was not fully up. This would result in failures that would be ignored.

- Added a new Global WARP override setting. This setting puts account administrators in control of disabling and enabling WARP across all devices registered to an account from the dashboard. Global WARP override is disabled by default.

Known issues

- DNS resolution may be broken when the following conditions are all true:

  - WARP is in Secure Web Gateway without DNS filtering (tunnel-only) mode.

  - A custom DNS server address is configured on the primary network adapter.

  - The custom DNS server address on the primary network adapter is changed while WARP is connected.

  To work around this issue, reconnect the WARP client by toggling off and back on.

-

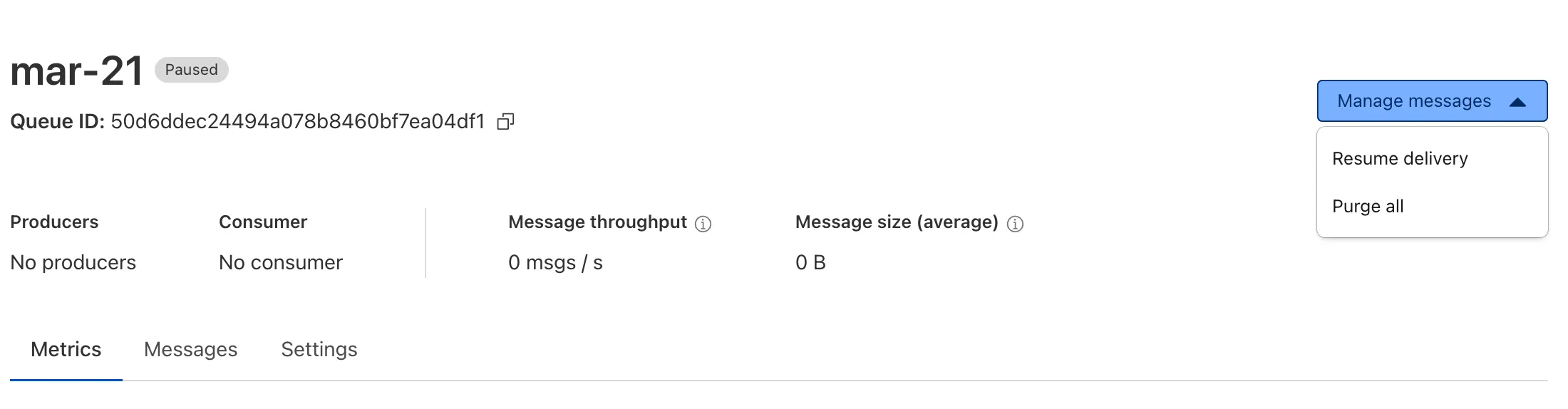

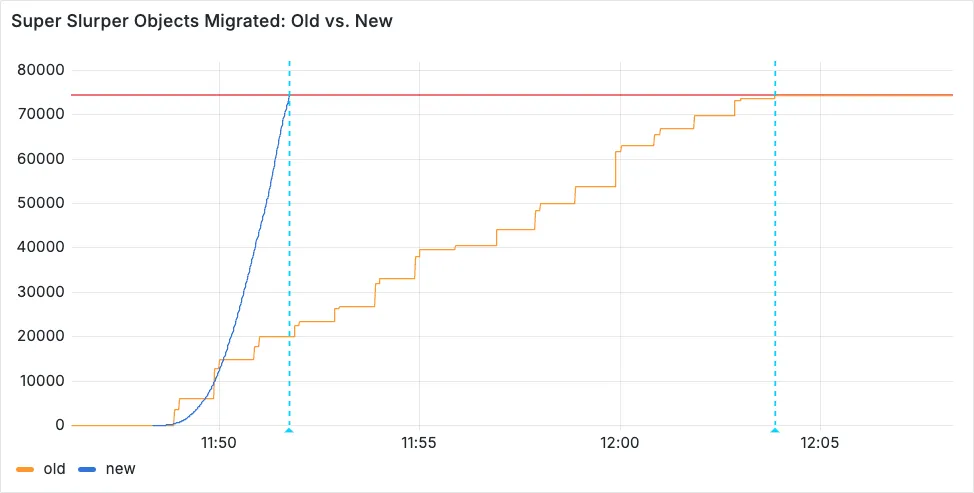

Queues now supports the ability to pause message delivery and/or purge (delete) messages on a queue. These operations can be useful when:

- Your consumer has a bug or downtime, and you want to temporarily stop messages from being processed while you fix the bug

- You have pushed invalid messages to a queue due to a code change during development, and you want to clean up the backlog

- Your queue has a backlog that is stale and you want to clean it up to allow new messages to be consumed

To pause a queue using Wrangler, run the `pause-delivery` command. Paused queues continue to receive messages. You can unpause a queue using the `resume-delivery` command.

```sh
$ wrangler queues pause-delivery my-queue
Pausing message delivery for queue my-queue.
Paused message delivery for queue my-queue.

$ wrangler queues resume-delivery my-queue
Resuming message delivery for queue my-queue.
Resumed message delivery for queue my-queue.
```

Purging a queue permanently deletes all messages in the queue. Unlike pausing, purging is an irreversible operation:

```sh
$ wrangler queues purge my-queue
✔ This operation will permanently delete all the messages in queue my-queue. Type my-queue to proceed. … my-queue
Purged queue 'my-queue'
```

You can also do these operations using the Queues REST API, or the dashboard page for a queue.

This feature is available on all new and existing queues. Head over to the pause and purge documentation to learn more. And if you haven't used Cloudflare Queues before, get started with the Cloudflare Queues guide.

-

The latest version of audit logs streamlines audit logging by automatically capturing all user and system actions performed through the Cloudflare Dashboard or public APIs. This update leverages Cloudflare’s existing API Shield to generate audit logs based on OpenAPI schemas, ensuring a more consistent and automated logging process.

Availability: Audit logs (version 2) is now in Beta, with support limited to API access.

Use the following API endpoint to retrieve audit logs:

```txt
GET https://api.cloudflare.com/client/v4/accounts/<account_id>/logs/audit?since=<date>&before=<date>
```

You can access detailed documentation for the audit logs (version 2) Beta API release here ↗.

Key Improvements in the Beta Release:

- Automated & standardized logging: Logs are now generated automatically using a standardized system, replacing manual, team-dependent logging. This ensures consistency across all Cloudflare services.

- Expanded product coverage: Increased audit log coverage from 75% to 95%. Key API endpoints such as `/accounts`, `/zones`, and `/organizations` are now included.

- Granular filtering: Logs now follow a uniform format, enabling precise filtering by actions, users, methods, and resources, allowing for faster and more efficient investigations.

- Enhanced context and traceability: Each log entry now includes detailed context, such as the authentication method used, the interface (API or Dashboard) through which the action was performed, and mappings to Cloudflare Ray IDs for better traceability.

- Comprehensive activity capture: Expanded logging to include GET requests and failed attempts, ensuring that all critical activities are recorded.

Known Limitations in Beta

- Error handling for the API is not implemented.

- There may be gaps or missing entries in the available audit logs.

- UI is unavailable in this Beta release.

- System-level logs and User-Activity logs are not included.

Support for these features is coming as part of the GA release later this year. For more details, including a sample audit log, check out our blog post: Introducing Automatic Audit Logs ↗

-

-

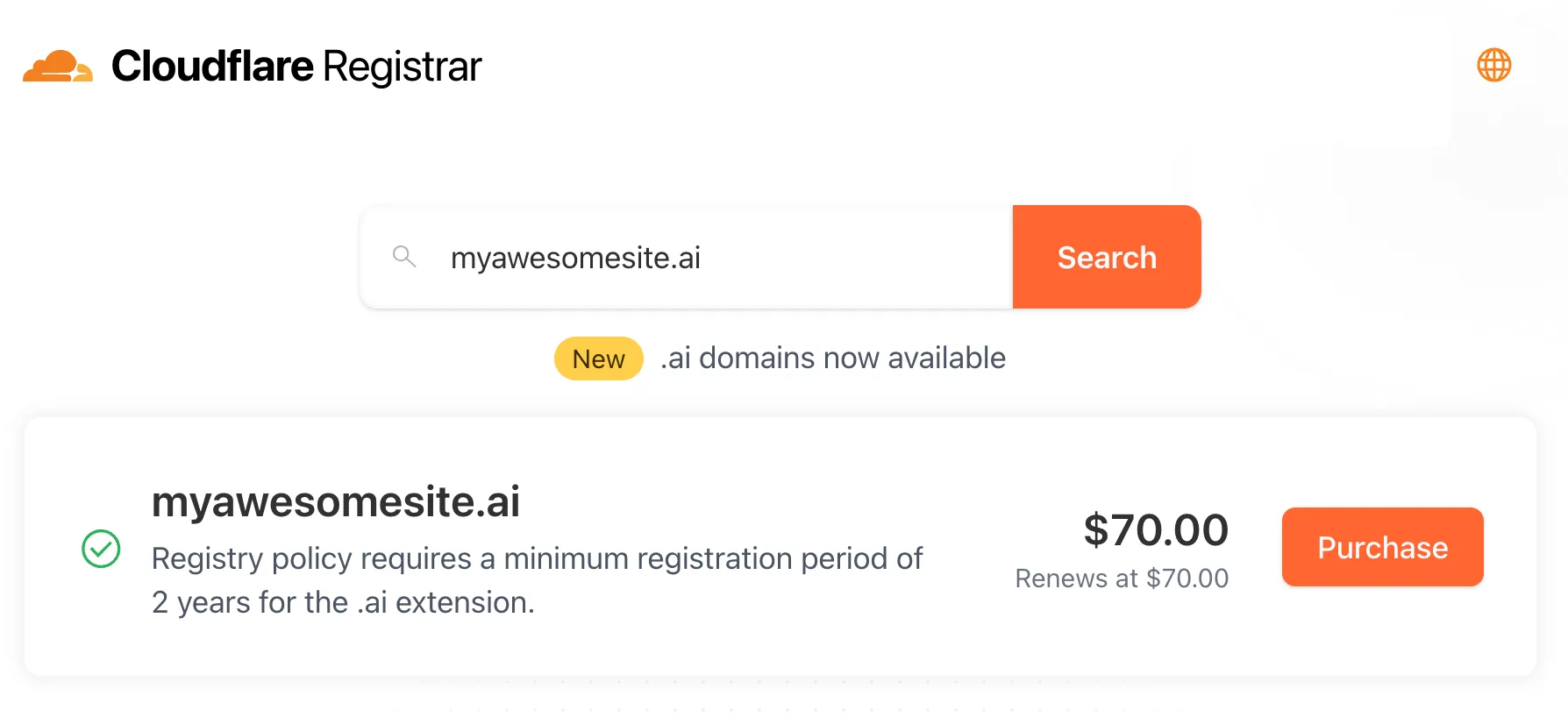

Cloudflare Registrar now supports `.ai` and `.shop` domains. These are two of our most highly-requested top-level domains (TLDs) and are great additions to the 300+ other TLDs we support ↗.

Starting today, customers can:

- Register and renew these domains at cost without any markups or add-on fees

- Enjoy best-in-class security and performance with native integrations with Cloudflare DNS, CDN, and SSL services like one-click DNSSEC

- Combat domain hijacking with Custom Domain Protection ↗ (available on enterprise plans)

We can't wait to see what AI and e-commerce projects you deploy on Cloudflare. To get started, transfer your domains to Cloudflare or search for new ones to register ↗.

-

You can now run a Worker for up to 5 minutes of CPU time for each request.

Previously, each Workers request ran for a maximum of 30 seconds of CPU time — that is the time that a Worker is actually performing a task (we still allowed unlimited wall-clock time, in case you were waiting on slow resources). This meant that some compute-intensive tasks were impossible to do with a Worker. For instance, you might want to take the cryptographic hash of a large file from R2. If this computation ran for over 30 seconds, the Worker request would have timed out.

By default, Workers are still limited to 30 seconds of CPU time. This protects developers from incurring accidental cost due to buggy code.

By changing the `cpu_ms` value in your Wrangler configuration, you can opt in to any value up to 300,000 (5 minutes).

```jsonc
{
  // ...rest of your configuration...
  "limits": {
    "cpu_ms": 300000,
  },
  // ...rest of your configuration...
}
```

```toml
[limits]
cpu_ms = 300_000
```

For more information on the updated limits, see the documentation on Wrangler configuration for `cpu_ms` and on Workers CPU time limits.

For building long-running tasks on Cloudflare, we also recommend checking out Workflows and Queues.
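Since `cpu_ms` is expressed in milliseconds, a small conversion helper can guard against values outside the allowed range when the setting is generated by a script. This is a sketch only: `cpuMsForSeconds` is a hypothetical function, and the two constants simply restate the 30-second default and 5-minute maximum described above.

```typescript
// Values from the text above: 30 s default, 5 min (300,000 ms) maximum.
const DEFAULT_CPU_MS = 30_000;
const MAX_CPU_MS = 300_000;

// Converts a CPU budget in seconds to a `cpu_ms` value, rejecting
// anything that is not positive or that exceeds the maximum.
function cpuMsForSeconds(seconds: number): number {
  const ms = Math.round(seconds * 1000);
  if (!Number.isFinite(ms) || ms <= 0) {
    throw new RangeError("cpu_ms must be a positive number of milliseconds");
  }
  if (ms > MAX_CPU_MS) {
    throw new RangeError(`cpu_ms cannot exceed ${MAX_CPU_MS}`);
  }
  return ms;
}

console.log(cpuMsForSeconds(5 * 60));
```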

-

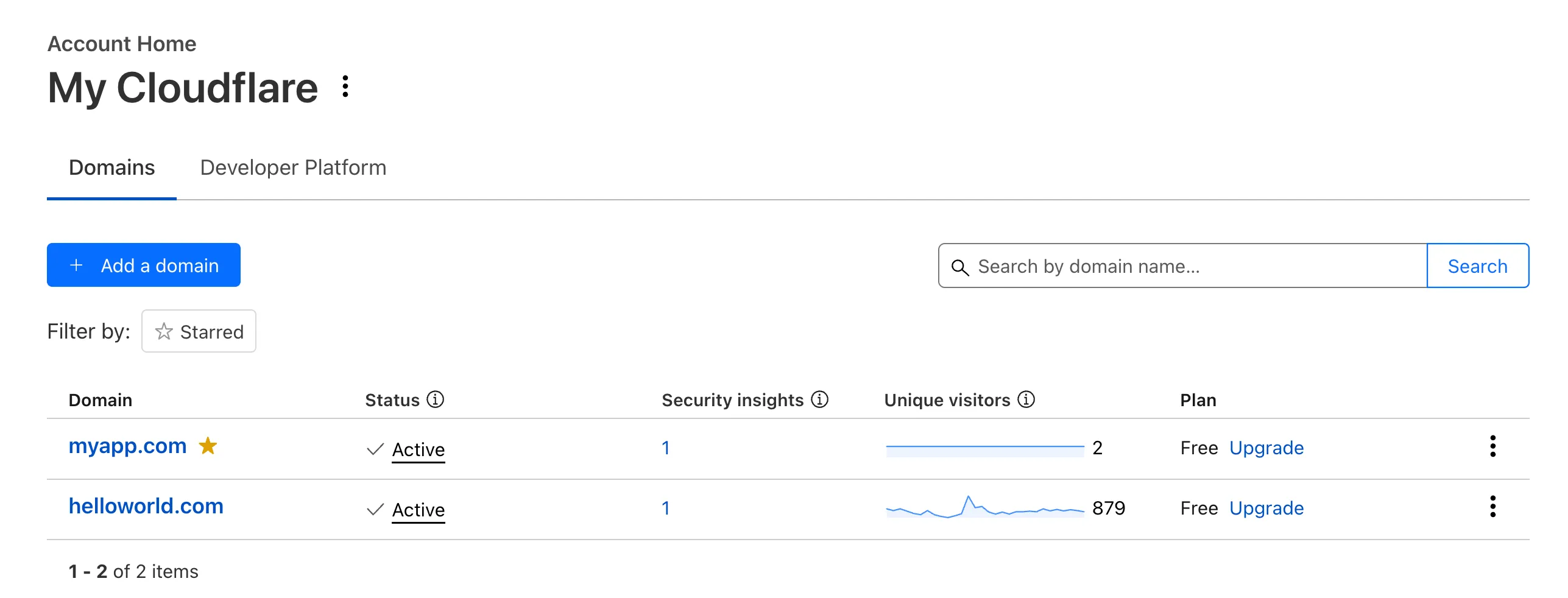

Recently, Account Home has been updated to streamline your workflows:

- Recent Workers projects: You'll now find your projects readily accessible from a new `Developer Platform` tab on Account Home. See recently-modified projects and explore what you can do with our developer-focused products.

- Traffic and security insights: Get a snapshot of domain performance at a glance with key metrics and trends.

- Quick actions: You can now perform common actions for your account, domains, and even Workers in just 1-2 clicks from the 3-dot menu.

- Keep starred domains front and center: Now, when you filter for starred domains on Account Home, we'll save your preference so you'll continue to only see starred domains by default.

We can't wait for you to take the new Account Home for a spin.

For more info:

-

-

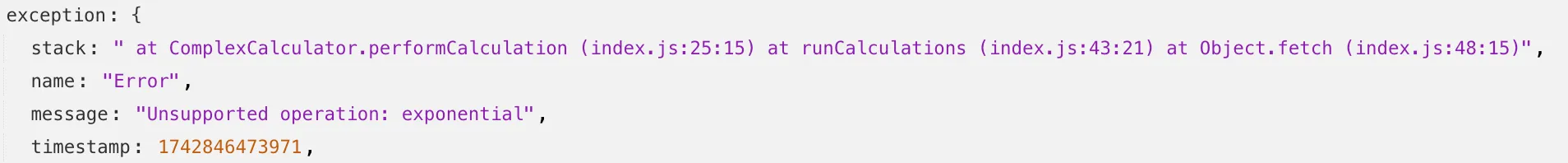

Source maps are now Generally Available (GA). They can now be uploaded with a maximum gzipped size of 15 MB. Previously, the maximum size limit was 15 MB uncompressed.

Source maps help map between the original source code and the transformed/minified code that gets deployed to production. By uploading your source map, you allow Cloudflare to map the stack trace from exceptions onto the original source code making it easier to debug.

With no source maps uploaded: notice how all the JavaScript has been minified to one file, so the stack trace is missing information on file name, shows incorrect line numbers, and incorrectly references `js` instead of `ts`.

With source maps uploaded: all methods reference the correct files and line numbers.

Uploading source maps and stack trace remapping happens out of band from the Worker execution, so source maps do not affect upload speed, bundle size, or cold starts. The remapped stack traces are accessible through Tail Workers, Workers Logs, and Workers Logpush.

To enable source maps, add the following to your Pages Function's or Worker's Wrangler configuration:

```jsonc
{
  "upload_source_maps": true
}
```

```toml
upload_source_maps = true
```

-

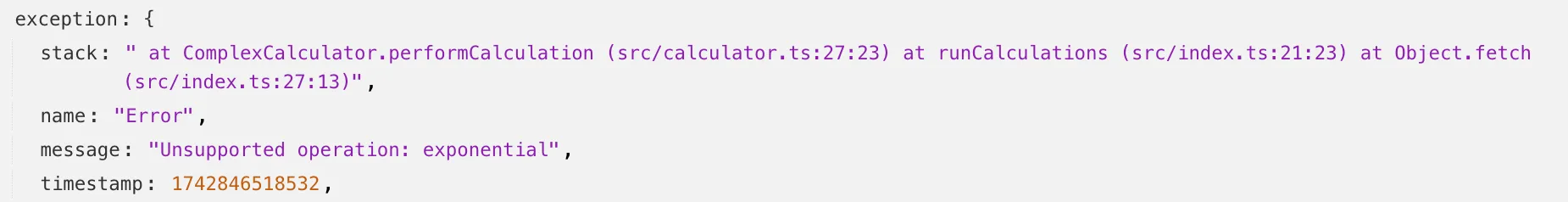

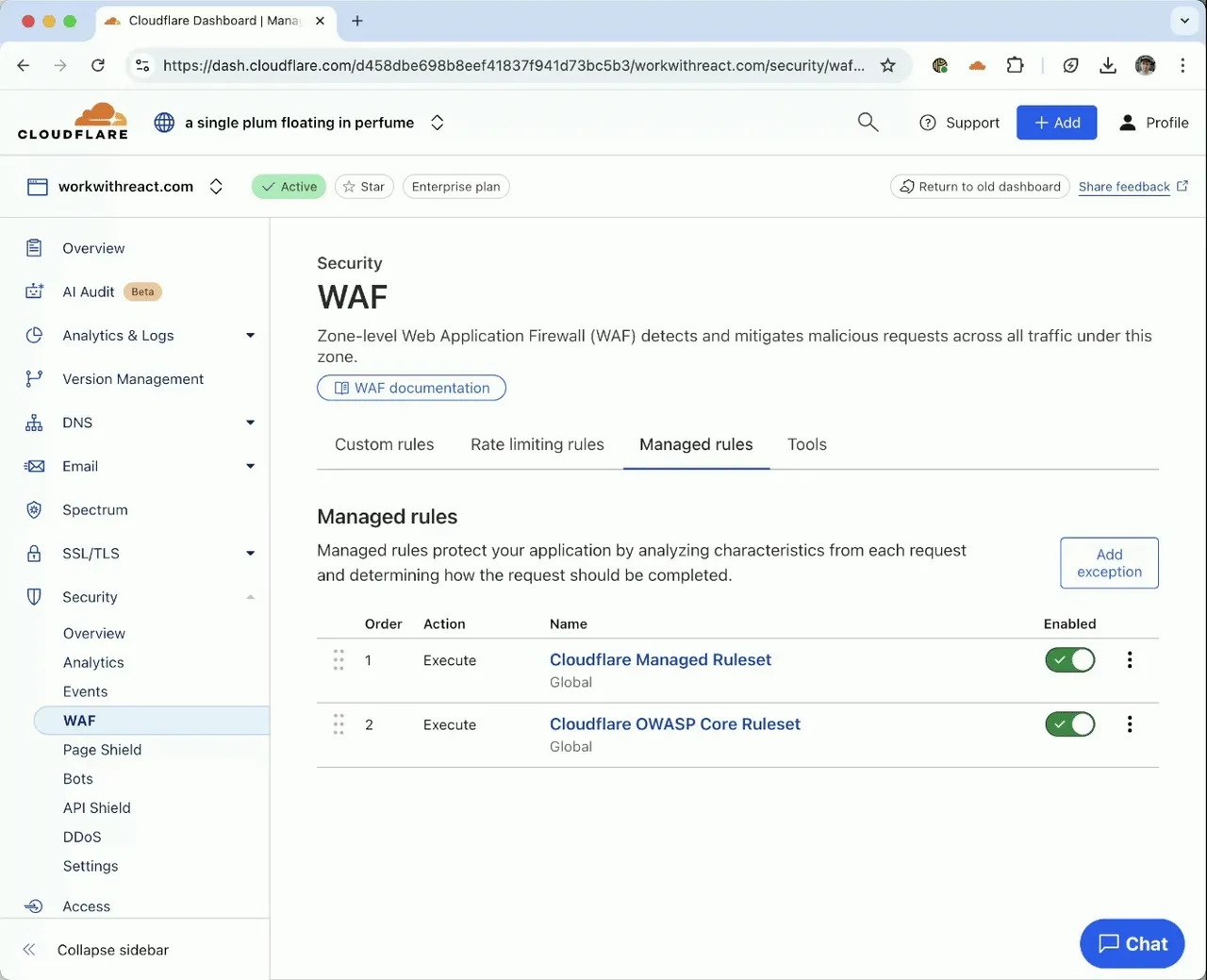

Update: Mon Mar 24th, 11PM UTC: Next.js has made further changes to address a smaller vulnerability introduced in the patches made to its middleware handling. Users should upgrade to Next.js versions `15.2.4`, `14.2.26`, `13.5.10` or `12.3.6`. If you are unable to immediately upgrade or are running an older version of Next.js, you can enable the WAF rule described in this changelog as a mitigation.

Update: Mon Mar 24th, 8PM UTC: Next.js has now backported the patch for this vulnerability ↗ to cover Next.js v12 and v13. Users on those versions will need to patch to `13.5.9` and `12.3.5` (respectively) to mitigate the vulnerability.

Update: Sat Mar 22nd, 4PM UTC: We have changed this WAF rule to opt-in only, as sites that use auth middleware with third-party auth vendors were observing failing requests.

We strongly recommend updating your version of Next.js (if eligible) to the patched versions, as your app will otherwise be vulnerable to an authentication bypass attack regardless of auth provider.

This rule is opt-in only for sites on the Pro plan or above in the WAF managed ruleset.

To enable the rule:

- Head to Security > WAF > Managed rules in the Cloudflare dashboard for the zone (website) you want to protect.

- Click the three dots next to Cloudflare Managed Ruleset and choose Edit

- Scroll down and choose Browse Rules

- Search for CVE-2025-29927 (ruleId: `34583778093748cc83ff7b38f472013e`)

- Change the Status to Enabled and the Action to Block. You can optionally set the rule to Log, to validate potential impact before enabling it. Log will not block requests.

- Click Next

- Scroll down and choose Save

This will enable the WAF rule and block requests with the `x-middleware-subrequest` header regardless of Next.js version.

For users on the Free plan, or who want to define a more specific rule, you can create a Custom WAF rule to block requests with the `x-middleware-subrequest` header regardless of Next.js version.

To create a custom rule:

- Head to Security > WAF > Custom rules in the Cloudflare dashboard for the zone (website) you want to protect.

- Give the rule a name - e.g. `next-js-CVE-2025-29927`

- Set the matching parameters for the rule to match any request where the `x-middleware-subrequest` header `exists`, per the rule expression below:

  ```txt
  (len(http.request.headers["x-middleware-subrequest"]) > 0)
  ```

- Set the action to 'block'. If you want to observe the impact before blocking requests, set the action to 'log' (and edit the rule later).

- Deploy the rule.
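To see which requests the custom rule expression above would match, the condition can be mirrored locally. This is only an illustration of the matching logic, not how the WAF evaluates rules: `matchesSubrequestRule` is a hypothetical function that uses the standard `Headers` class and treats any occurrence of the header as a match.

```typescript
// Local mirror of: (len(http.request.headers["x-middleware-subrequest"]) > 0)
// i.e. the rule matches whenever the header is present on the request.
function matchesSubrequestRule(headers: Headers): boolean {
  // Header names are case-insensitive, and Headers.has handles that for us.
  return headers.has("x-middleware-subrequest");
}

const suspicious = new Headers({ "X-Middleware-Subrequest": "middleware" });
const ordinary = new Headers({ accept: "text/html" });

console.log(matchesSubrequestRule(suspicious), matchesSubrequestRule(ordinary));
```

A check like this can be handy in test suites to confirm that legitimate traffic from your own clients never sets the header before you switch the rule from 'log' to 'block'.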

We've made a WAF (Web Application Firewall) rule available to all sites on Cloudflare to protect against the Next.js authentication bypass vulnerability ↗ (`CVE-2025-29927`) published on March 21st, 2025.

Note: This rule is not enabled by default as it blocked requests across sites for specific authentication middleware.

- This managed rule protects sites using Next.js on Workers and Pages, as well as sites using Cloudflare to protect Next.js applications hosted elsewhere.

- This rule has been made available (but not enabled by default) to all sites as part of our WAF Managed Ruleset and blocks requests that attempt to bypass authentication in Next.js applications.

- The vulnerability affects almost all Next.js versions, and has been fully patched in Next.js `14.2.26` and `15.2.4`. Earlier, interim releases did not fully patch this vulnerability.

- Users on older versions of Next.js (`11.1.4` to `13.5.6`) did not originally have a patch available, but the patch for this vulnerability and a subsequent additional patch have been backported to Next.js versions `12.3.6` and `13.5.10` as of Monday, March 24th. Users on Next.js v11 will need to deploy the stated workaround or enable the WAF rule.

The managed WAF rule mitigates this by blocking external user requests with the `x-middleware-subrequest` header regardless of Next.js version, but we recommend users using Next.js 14 and 15 upgrade to the patched versions of Next.js as an additional mitigation.

-

We're excited to introduce the Cloudflare Zero Trust Secure DNS Locations Write role, designed to provide DNS filtering customers with granular control over third-party access when configuring their Protective DNS (PDNS) solutions.

Many DNS filtering customers rely on external service partners to manage their DNS location endpoints. This role allows you to grant access to external parties to administer DNS locations without overprovisioning their permissions.

Secure DNS Location Requirements:

- Mandate usage of Bring your own DNS resolver IP addresses ↗ if available on the account.

- Require source network filtering for IPv4/IPv6/DoT endpoints; token authentication or source network filtering for the DoH endpoint.

You can assign the new role via Cloudflare Dashboard (Manage Accounts > Members) or via API. For more information, refer to the Secure DNS Locations documentation ↗.

-

We are excited to announce that AI Gateway now supports real-time AI interactions with the new Realtime WebSockets API.

This new capability allows developers to establish persistent, low-latency connections between their applications and AI models, enabling natural, real-time conversational AI experiences, including speech-to-speech interactions.

The Realtime WebSockets API works with the OpenAI Realtime API ↗ and Google Gemini Live API ↗, and supports real-time text and speech interactions with models from Cartesia ↗ and ElevenLabs ↗.

Here's how you can connect AI Gateway to OpenAI's Realtime API ↗ using WebSockets:

OpenAI Realtime API example:

    import WebSocket from "ws";

    const url =
      "wss://gateway.ai.cloudflare.com/v1/<account_id>/<gateway>/openai?model=gpt-4o-realtime-preview-2024-12-17";

    const ws = new WebSocket(url, {
      headers: {
        "cf-aig-authorization": process.env.CLOUDFLARE_API_KEY,
        Authorization: "Bearer " + process.env.OPENAI_API_KEY,
        "OpenAI-Beta": "realtime=v1",
      },
    });

    ws.on("open", () => {
      console.log("Connected to server.");
      // Send only after the socket is open; `ws` throws if send() is
      // called while the connection is still being established.
      ws.send(
        JSON.stringify({
          type: "response.create",
          response: { modalities: ["text"], instructions: "Tell me a joke" },
        }),
      );
    });

    // Log every event the model sends back.
    ws.on("message", (message) => console.log(JSON.parse(message.toString())));

Get started by checking out the Realtime WebSockets API documentation.
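The gateway URL in the example follows a fixed pattern, so it can be assembled from its parts. The helper below is a small sketch of that pattern. `buildRealtimeUrl` and its parameter names are illustrative and not part of any Cloudflare SDK, and the sketch only covers the `openai` provider path shown above.

```typescript
// Builds an AI Gateway Realtime URL following the pattern in the example above:
//   wss://gateway.ai.cloudflare.com/v1/<account_id>/<gateway>/openai?model=<model>
// The function and parameter names are illustrative assumptions.
function buildRealtimeUrl(
  accountId: string,
  gateway: string,
  model: string,
): string {
  // Encode each segment so unexpected characters cannot alter the path.
  const path = ["v1", accountId, gateway, "openai"]
    .map((segment) => encodeURIComponent(segment))
    .join("/");
  return `wss://gateway.ai.cloudflare.com/${path}?model=${encodeURIComponent(model)}`;
}
```

Keeping the account ID and gateway name as parameters makes it easier to read them from configuration instead of hard-coding them in the URL string.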

-

Document conversion plays an important role when designing and developing AI applications and agents. Workers AI now provides the `toMarkdown` utility method, which developers can use for quick, easy, and convenient conversion and summarization of documents in multiple formats to Markdown.

You can call this new tool through a binding with `env.AI.toMarkdown()` or through the REST API endpoint.

In this example, we fetch a PDF document and an image from R2 and feed them both to `env.AI.toMarkdown()`. The result is a list of converted documents. Workers AI models are used automatically to detect and summarize the image.

    import { Env } from "./env";

    export default {
      async fetch(request: Request, env: Env, ctx: ExecutionContext) {
        // https://pub-979cb28270cc461d94bc8a169d8f389d.r2.dev/somatosensory.pdf
        const pdf = await env.R2.get("somatosensory.pdf");

        // https://pub-979cb28270cc461d94bc8a169d8f389d.r2.dev/cat.jpeg
        const cat = await env.R2.get("cat.jpeg");

        // R2 returns null for missing objects, so guard before reading the bodies.
        if (!pdf || !cat) {
          return new Response("Source file not found in R2", { status: 404 });
        }

        return Response.json(
          await env.AI.toMarkdown([
            {
              name: "somatosensory.pdf",
              blob: new Blob([await pdf.arrayBuffer()], {
                type: "application/octet-stream",
              }),
            },
            {
              name: "cat.jpeg",
              blob: new Blob([await cat.arrayBuffer()], {
                type: "application/octet-stream",
              }),
            },
          ]),
        );
      },
    };

This is the result:

    [
      {
        "name": "somatosensory.pdf",
        "mimeType": "application/pdf",
        "format": "markdown",
        "tokens": 0,
        "data": "# somatosensory.pdf\n## Metadata\n- PDFFormatVersion=1.4\n- IsLinearized=false\n- IsAcroFormPresent=false\n- IsXFAPresent=false\n- IsCollectionPresent=false\n- IsSignaturesPresent=false\n- Producer=Prince 20150210 (www.princexml.com)\n- Title=Anatomy of the Somatosensory System\n\n## Contents\n### Page 1\nThis is a sample document to showcase..."
      },
      {
        "name": "cat.jpeg",
        "mimeType": "image/jpeg",
        "format": "markdown",
        "tokens": 0,
        "data": "The image is a close-up photograph of Grumpy Cat, a cat with a distinctive grumpy expression and piercing blue eyes. The cat has a brown face with a white stripe down its nose, and its ears are pointed upright. Its fur is light brown and darker around the face, with a pink nose and mouth. The cat's eyes are blue and slanted downward, giving it a perpetually grumpy appearance. The background is blurred, but it appears to be a dark brown color. Overall, the image is a humorous and iconic representation of the popular internet meme character, Grumpy Cat. The cat's facial expression and posture convey a sense of displeasure or annoyance, making it a relatable and entertaining image for many people."
      }
    ]

See Markdown Conversion for more information on supported formats, REST API and pricing.
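Once the conversion returns, a common next step is to merge the converted documents into a single Markdown string, for example to pass as context to a model. The sketch below assumes the result shape shown in the sample output above. The `ConvertedDocument` interface is inferred from that sample rather than taken from published type definitions, and `combineMarkdown` is a hypothetical helper.

```typescript
// Shape of one entry in the sample result above (inferred, not official types).
interface ConvertedDocument {
  name: string;
  mimeType: string;
  format: string;
  tokens: number;
  data: string;
}

// Joins converted documents into one Markdown string, one section per file.
// Entries whose format is not "markdown" are skipped; whether other formats
// can occur is an assumption made for safety.
function combineMarkdown(docs: ConvertedDocument[]): string {
  return docs
    .filter((doc) => doc.format === "markdown")
    .map(
      (doc) =>
        `<!-- source: ${doc.name} (${doc.mimeType}) -->\n${doc.data.trim()}`,
    )
    .join("\n\n---\n\n");
}
```

The HTML comment before each section records which file the text came from without affecting how the Markdown renders.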

-

📝 We've renamed the Agents package to `agents`!

If you've already been building with the Agents SDK, you can update your dependencies to use the new package name, and replace references to `agents-sdk` with `agents`:

    # Install the new package
    npm i agents

    # Remove the old (deprecated) package
    npm uninstall agents-sdk

    # Find instances of the old package name in your codebase
    grep -r 'agents-sdk' .

    # Replace instances of the old package name with the new one
    # (or use find-replace in your editor)
    sed -i 's/agents-sdk/agents/g' $(grep -rl 'agents-sdk' .)

All future updates will be pushed to the new `agents` package, and the older package has been marked as deprecated.

We've added a number of big new features to the Agents SDK over the past few weeks, including:

- You can now set `cors: true` when using `routeAgentRequest` to return permissive default CORS headers to Agent responses.

- The regular client now syncs state on the agent (just like the React version).

- `useAgentChat` bug fixes for passing headers/credentials, including properly clearing cache on unmount.

- Experimental `/schedule` module with a prompt/schema for adding scheduling to your app (with evals!).

- Changed the internal `zod` schema to be compatible with the limitations of Google's Gemini models by removing the discriminated union, allowing you to use Gemini models with the scheduling API.

We've also fixed a number of bugs with state synchronization and the React hooks.

JavaScript:

    // via https://github.com/cloudflare/agents/tree/main/examples/cross-domain
    import { routeAgentRequest } from "agents";

    export default {
      async fetch(request, env) {
        return (
          // Set { cors: true } to enable CORS headers.
          (await routeAgentRequest(request, env, { cors: true })) ||
          new Response("Not found", { status: 404 })
        );
      },
    };

TypeScript:

    // via https://github.com/cloudflare/agents/tree/main/examples/cross-domain
    import { routeAgentRequest } from "agents";

    export default {
      async fetch(request: Request, env: Env) {
        return (
          // Set { cors: true } to enable CORS headers.
          (await routeAgentRequest(request, env, { cors: true })) ||
          new Response("Not found", { status: 404 })
        );
      },
    } satisfies ExportedHandler<Env>;

We've added a new `@unstable_callable()` decorator for defining methods that can be called directly from clients. This allows you to call methods from within your client code: you can call methods (with arguments) and get native JavaScript objects back.

JavaScript:

    // server.ts
    import { unstable_callable, Agent } from "agents";

    export class Rpc extends Agent {
      // Use the decorator to define a callable method
      @unstable_callable({
        description: "rpc test",
      })
      async getHistory() {
        return this.sql`SELECT * FROM history ORDER BY created_at DESC LIMIT 10`;
      }
    }

TypeScript:

    // server.ts
    import { unstable_callable, Agent } from "agents";
    import type { Env } from "../server";

    export class Rpc extends Agent<Env> {
      // Use the decorator to define a callable method
      @unstable_callable({
        description: "rpc test",
      })
      async getHistory() {
        return this.sql`SELECT * FROM history ORDER BY created_at DESC LIMIT 10`;
      }
    }

We've fixed a number of small bugs in the `agents-starter` ↗ project, a real-time, chat-based example application with tool-calling & human-in-the-loop built using the Agents SDK. The starter has also been upgraded to use the latest wrangler v4 release.

If you're new to Agents, you can install and run the `agents-starter` project in two commands:

    # Install it
    $ npm create cloudflare@latest agents-starter -- --template="cloudflare/agents-starter"
    # Run it
    $ npm run start

You can use the starter as a template for your own Agents projects: open up `src/server.ts` and `src/client.tsx` to see how the Agents SDK is used.

We've heard your feedback on the Agents SDK documentation, and we're shipping more API reference material and usage examples, including:

- Expanded API reference documentation, covering the methods and properties exposed by the Agents SDK, as well as more usage examples.

- More Client API documentation that documents `useAgent`, `useAgentChat` and the new `@unstable_callable` RPC decorator exposed by the SDK.

- New documentation on how to call agents and (optionally) authenticate clients before they connect to your Agents.

Note that the Agents SDK is continually growing: the type definitions included in the SDK will always include the latest APIs exposed by the `agents` package.

If you're still wondering what Agents are, read our blog on building AI Agents on Cloudflare ↗ and/or visit the Agents documentation to learn more.

-

Radar has expanded its security insights, providing visibility into aggregate trends in authentication requests, including leaked credentials identified by leaked credentials detection scans.

We have now introduced the following endpoints:

- `summary`: Retrieves summaries of HTTP authentication requests distribution across two different dimensions.

- `timeseries_group`: Retrieves timeseries data for HTTP authentication requests distribution across two different dimensions.

The following dimensions are available, displaying the distribution of HTTP authentication requests based on:

- `compromised`: Credential status (clean vs. compromised).

- `bot_class`: Bot class (human vs. bot).
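The two endpoints and two dimensions give four possible queries. The sketch below enumerates them with type-checked names. The text above does not state the URL layout, so the `<basePath>/<endpoint>/<dimension>` structure and the `basePath` argument are assumptions for illustration only; check the Radar API reference for the actual routes.

```typescript
// Endpoint and dimension names as listed above.
type AuthEndpoint = "summary" | "timeseries_group";
type AuthDimension = "compromised" | "bot_class";

// ASSUMPTION: paths are laid out as <basePath>/<endpoint>/<dimension>.
// `basePath` is a caller-supplied placeholder, not a documented route.
function radarAuthPath(
  basePath: string,
  endpoint: AuthEndpoint,
  dimension: AuthDimension,
): string {
  const trimmed = basePath.replace(/\/+$/, ""); // drop trailing slashes
  return `${trimmed}/${endpoint}/${dimension}`;
}

// Enumerates every endpoint/dimension combination described above.
function allRadarAuthPaths(basePath: string): string[] {
  const endpoints: AuthEndpoint[] = ["summary", "timeseries_group"];
  const dimensions: AuthDimension[] = ["compromised", "bot_class"];
  const paths: string[] = [];
  for (const endpoint of endpoints) {
    for (const dimension of dimensions) {
      paths.push(radarAuthPath(basePath, endpoint, dimension));
    }
  }
  return paths;
}
```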

Dive deeper into leaked credential detection in this blog post ↗ and learn more about the expanded Radar security insights in our blog post ↗.

-

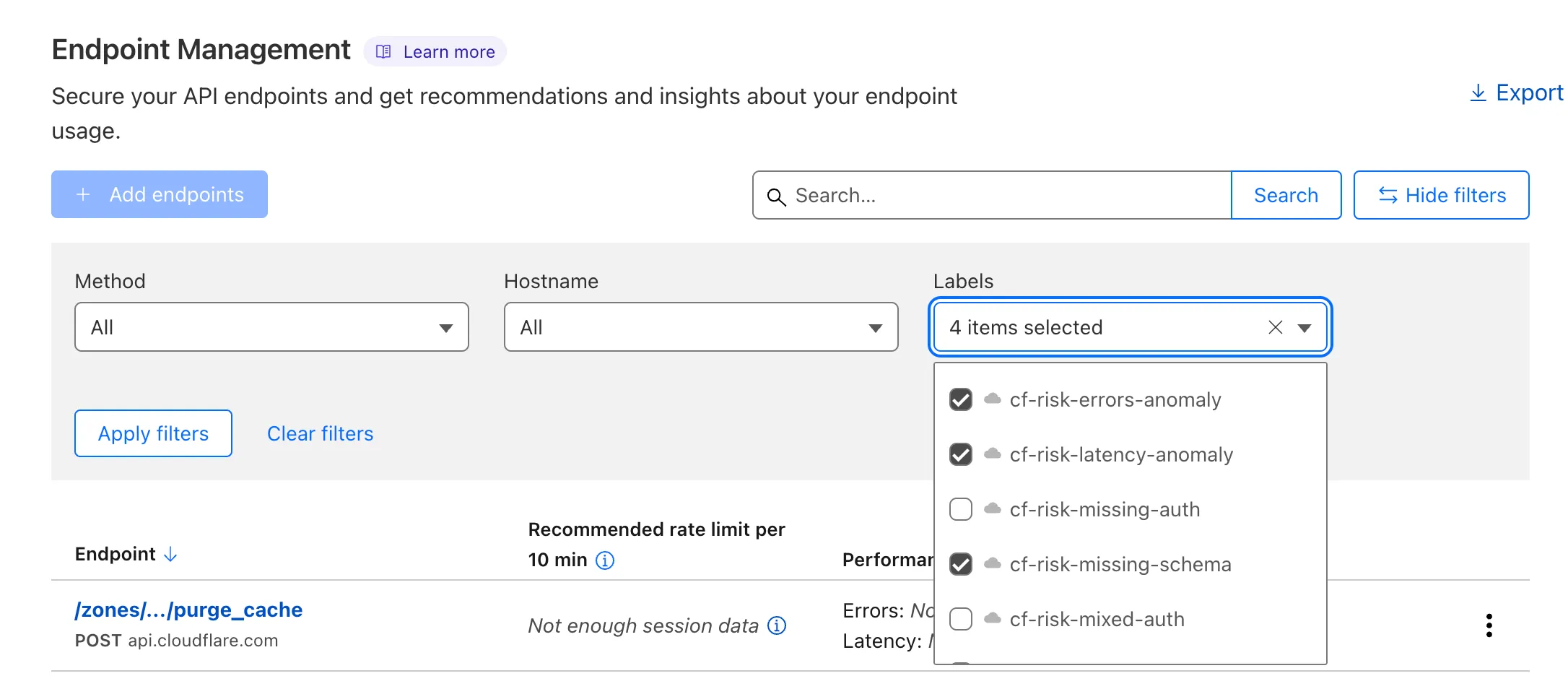

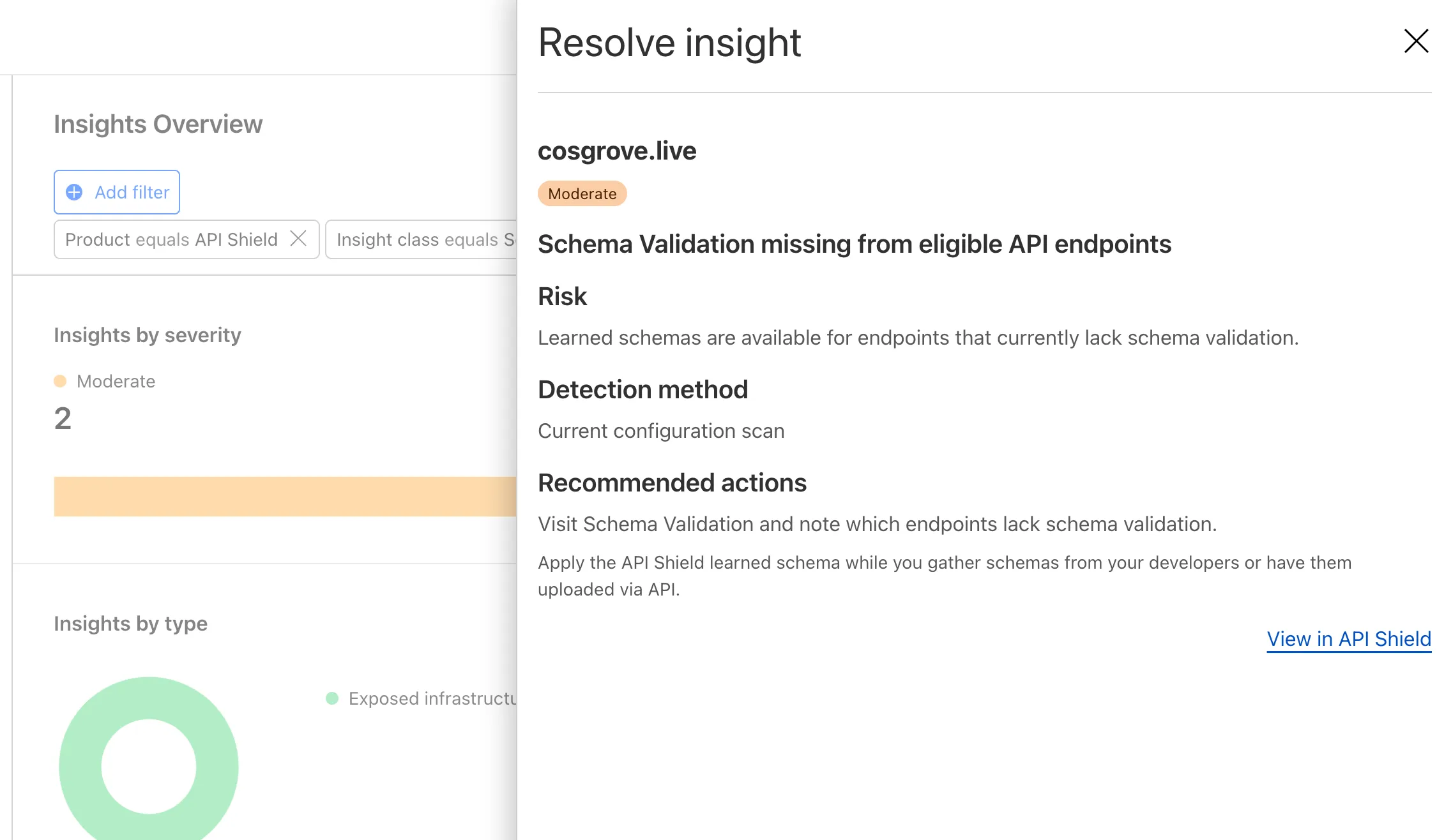

Now, API Shield automatically labels your API inventory with API-specific risks so that you can track and manage risks to your APIs.

View these risks in Endpoint Management by label:

...or in Security Center Insights:

API Shield will scan for risks on your API inventory daily. Here are the new risks we're scanning for and automatically labelling:

- cf-risk-sensitive: applied if the customer is subscribed to the sensitive data detection ruleset and the WAF detects sensitive data returned on an endpoint in the last seven days.

- cf-risk-missing-auth: applied if the customer has configured a session ID and no successful requests to the endpoint contain the session ID.

- cf-risk-mixed-auth: applied if the customer has configured a session ID and some successful requests to the endpoint contain the session ID while some lack the session ID.

- cf-risk-missing-schema: added when a learned schema is available for an endpoint that has no active schema.

- cf-risk-error-anomaly: added when an endpoint experiences a recent increase in response errors over the last 24 hours.

- cf-risk-latency-anomaly: added when an endpoint experiences a recent increase in response latency over the last 24 hours.

- cf-risk-size-anomaly: added when an endpoint experiences a spike in response body size over the last 24 hours.
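The two authentication labels differ only in whether any successful requests carried the session ID. The following sketch expresses that distinction as a function over request counts. The `SessionCounts` input shape and the decision to return no label when there is no successful traffic are assumptions; API Shield's actual scan logic is not described beyond the label definitions above.

```typescript
type AuthRiskLabel = "cf-risk-missing-auth" | "cf-risk-mixed-auth" | null;

// Counts of *successful* requests to one endpoint, split by whether the
// configured session ID was present. This input shape is an assumption.
interface SessionCounts {
  withSessionId: number;
  withoutSessionId: number;
}

function classifyAuthRisk(counts: SessionCounts): AuthRiskLabel {
  const { withSessionId, withoutSessionId } = counts;
  // ASSUMPTION: with no successful traffic there is nothing to judge.
  if (withSessionId + withoutSessionId === 0) return null;
  // No successful request contained the session ID.
  if (withSessionId === 0) return "cf-risk-missing-auth";
  // Some successful requests had the session ID and some lacked it.
  if (withoutSessionId > 0) return "cf-risk-mixed-auth";
  // Every successful request carried the session ID.
  return null;
}
```

For example, an endpoint with 120 authenticated and 3 unauthenticated successful requests would be labelled `cf-risk-mixed-auth` under this sketch.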

In addition, API Shield has two new 'beta' scans for Broken Object Level Authorization (BOLA) attacks. If you're in the beta, you will see the following two labels when API Shield suspects an endpoint is suffering from a BOLA vulnerability:

- cf-risk-bola-enumeration: added when an endpoint experiences successful responses with drastic differences in the number of unique elements requested by different user sessions.

- cf-risk-bola-pollution: added when an endpoint experiences successful responses where parameters are found in multiple places in the request.

We are currently accepting more customers into our beta. Contact your account team if you are interested in BOLA attack detection for your API.

Refer to the blog post ↗ for more information about Cloudflare's expanded posture management capabilities.

-

Workers AI is excited to add 4 new models to the catalog, including 2 brand-new classes of models: text-to-speech and reranker. Introducing:

- @cf/baai/bge-m3 - a multi-lingual embeddings model that supports over 100 languages. It can also simultaneously perform dense retrieval, multi-vector retrieval, and sparse retrieval, with the ability to process inputs of different granularities.

- @cf/baai/bge-reranker-base - our first reranker model! Rerankers are a type of text classification model that takes a query and context, and outputs a similarity score between the two. When used in RAG systems, you can use a reranker after the initial vector search to find the most relevant documents to return to a user by reranking the outputs.

- @cf/openai/whisper-large-v3-turbo - a faster, more accurate speech-to-text model. This model was added earlier but is graduating out of beta with pricing included today.

- @cf/myshell-ai/melotts - our first text-to-speech model that allows users to generate an MP3 with voice audio from inputted text.
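To illustrate where a reranker fits in a RAG pipeline, the sketch below takes documents returned by an initial vector search together with relevance scores and keeps the highest-scoring ones. It does not call Workers AI. The `RerankScore` shape (an index into the document list plus a score) is an assumption about what a reranker returns, and `topRerankedDocuments` is a hypothetical helper.

```typescript
// ASSUMED shape of one reranker result: `id` is the index of the document
// in the list that was scored, `score` is its relevance to the query.
interface RerankScore {
  id: number;
  score: number;
}

// Returns the `topK` documents ordered from most to least relevant.
function topRerankedDocuments(
  documents: string[],
  scores: RerankScore[],
  topK: number,
): string[] {
  return [...scores] // copy so the caller's array is not reordered
    .filter((s) => s.id >= 0 && s.id < documents.length)
    .sort((a, b) => b.score - a.score)
    .slice(0, topK)
    .map((s) => documents[s.id]);
}
```

In practice the `scores` argument would come from running the reranker model on the query and the candidate documents, after which only the top results are passed to the text generation model.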

Pricing is available for each of these models on the Workers AI pricing page.

This docs update includes a few minor bug fixes to the model schema for llama-guard and llama-3.2-1b, which you can review on the product changelog.

Try it out and let us know what you think! Stay tuned for more models in the coming days.

-

You can now access bindings from anywhere in your Worker by importing the `env` object from `cloudflare:workers`.

Previously, `env` could only be accessed during a request. This meant that bindings could not be used in the top-level context of a Worker.

Now, you can import `env` and access bindings such as secrets or environment variables in the initial setup for your Worker:

    import { env } from "cloudflare:workers";
    import ApiClient from "example-api-client";

    // API_KEY and LOG_LEVEL now usable in top-level scope
    const apiClient = ApiClient.new({ apiKey: env.API_KEY });
    const LOG_LEVEL = env.LOG_LEVEL || "info";

    export default {
      fetch(req) {
        // you can use apiClient or LOG_LEVEL, configured before any request is handled
      },
    };

Additionally,